Search technology has moved beyond simple keyword matching into an era of intelligent, conversational search experiences. Central to this evolution is query fan-out, a technique that enables AI systems to break down user questions into multiple sub-queries, delivering richer, more accurate, and context-aware answers.

Understanding query fan-out is essential for modern AI Engine Optimization (AEO) and Generative Engine Optimization (GEO). But what exactly is it, and why does it matter for both?

“For content creators, query fan-out changes the game. You’re no longer writing for a single keyword, you’re writing for the ecosystem of questions surrounding it.”

— Elena Marquez, Head of SEO Strategy, InsightEdge Digital

Let’s break it down in plain language and see why it’s becoming essential for anyone creating content in the age of AI.

The Age of the Digital Concierge

The Problem with Old-School SEO:

Old-school SEO, focused solely on ranking for individual keywords, is no longer sufficient. Modern search engines act as digital concierges, guiding users through conversational journeys. Creating content that addresses only a single query risks missing the broader spectrum of user intent and follow-up questions.

This is where query fan-out comes into play. Instead of treating a user’s question as a standalone request, search engines now view it as the starting point of an ongoing dialogue.

Query fan-out is the process where an AI system takes your original question, breaks it down into multiple related questions, gathers information for each one, and combines everything into a comprehensive response. It’s like having a super-smart assistant who not only answers your question but anticipates what you’ll ask next.

When someone searches for “best coffee makers,” they’re not just looking for a list of products. Their query naturally fans out to include:

- How much do they cost?

- What features matter most?

- Are there any current deals?

- How long do they last?

This shift means we need to rethink how we create content. It’s no longer about winning a single keyword battle but providing the most helpful, comprehensive resource that addresses the full spectrum of a user’s information needs.

Understanding query fan-out in search engines

Query fan-out was popularized by Google with the introduction of AI Mode, its conversational AI interface within Google Search. Other major AI systems, such as ChatGPT, also employ the technique to deliver more comprehensive answers.

What makes query fan-out so powerful is its ability to handle complex, multi-faceted questions that might not have been directly answered anywhere online before. By breaking down your question into smaller parts, the system can gather information from various sources and synthesize a unique response.
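To make the idea concrete, here is a minimal Python sketch of the decompose, retrieve, and combine loop described above. It is only an illustration: the `FOLLOW_UP_TEMPLATES` list and the `fake_retrieve` function are assumptions made up for this example, and a real engine would generate sub-queries with a language model and call an actual search backend.

```python
from typing import Callable

# Hypothetical follow-up templates; a real engine would generate these with a model.
FOLLOW_UP_TEMPLATES = [
    "{q} price",
    "{q} key features",
    "{q} current deals",
    "{q} lifespan",
]

def fan_out(query: str) -> list[str]:
    """Expand one seed query into the seed plus its related sub-queries."""
    return [query] + [t.format(q=query) for t in FOLLOW_UP_TEMPLATES]

def answer(query: str, retrieve: Callable[[str], str]) -> dict[str, str]:
    """Retrieve a snippet for every sub-query and collect the results."""
    return {sub: retrieve(sub) for sub in fan_out(query)}

def fake_retrieve(sub_query: str) -> str:
    # Stand-in for a search backend (assumption for illustration only).
    return f"snippet about {sub_query}"

results = answer("best coffee makers", fake_retrieve)
for sub, snippet in results.items():
    print(f"{sub} -> {snippet}")
```

The final synthesis step is left out on purpose; in practice the collected snippets would be passed to a generative model to write one combined answer.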

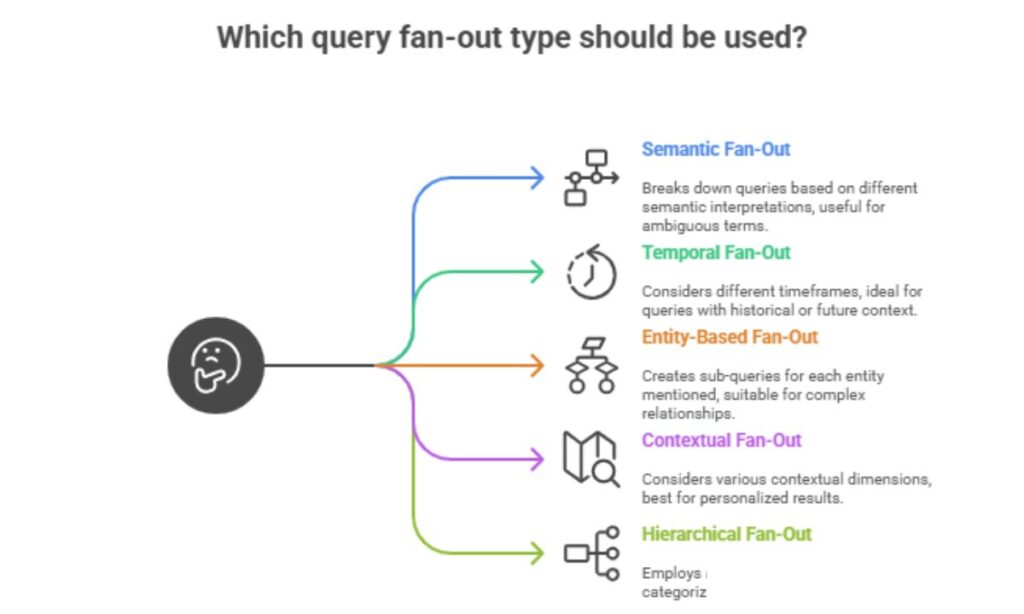

Exploring Query Fan-Out Types for AI & GEO

AI and generative engines employ several distinct types of query fan-out techniques:

| Type | Description |
| --- | --- |
| Semantic Fan-Out | Breaks down queries based on different semantic interpretations (e.g., “banks” could mean financial institutions, river banks, or data banks) |
| Temporal Fan-Out | Considers different timeframes for a query (current, historical, and future perspectives) |
| Entity-Based Fan-Out | Creates separate sub-queries for each entity mentioned and their relationships |
| Contextual Fan-Out | Considers various contextual dimensions like location, user intent, device type, or search history |
| Hierarchical Fan-Out | Employs a tree-like structure where queries branch into categories and subcategories |

These different approaches can be used individually or in combination, depending on the complexity of the query and the capabilities of the AI system.

How query fan-out improves result diversity and speed

Query fan-out significantly enhances both the diversity and speed of search results in several ways:

- Enhanced Result Diversity: By pursuing multiple interpretations simultaneously, query fan-out captures a wider range of perspectives, which helps reduce “filter bubble” effects

- Parallel Processing Advantages: Modern search infrastructures can process sub-queries in parallel rather than sequentially, so total response time is closer to that of the slowest sub-query than to the sum of all of them

- More Accurate Relevance Scoring: When results are gathered from various fan-out branches, the system gains additional context for determining relevance

- Reduced Processing Overhead: Rather than running extremely complex queries, fan-out allows systems to run multiple simpler queries that are often more efficient

- Improved Caching Efficiency: Sub-queries are more standardized and common, increasing the likelihood that some results are already cached

AEO Context

In AI Engine Optimization (AEO), query fan-out transforms how we approach informational content. Let’s see this in action with a practical example.

The old approach to a query like “What is a geothermal heating system?” would create a straightforward definition post. You’d explain what it is, add some basic details, and call it a day. But that’s not how people actually consume information.

With query fan-out in mind, we understand that this initial question is just the tip of the iceberg. When someone asks about geothermal heating, their mind is already racing ahead to several follow-up questions:

- “How exactly does it work?” – They want to understand the technology

- “What are the advantages and disadvantages?” – They’re weighing alternatives

- “How much will it cost me?” – They need to know if it’s financially viable

- “Are there tax credits or rebates available?” – They’re looking for ways to offset costs

- “How long does installation take?” – They’re planning their timeline

In the AEO context, query fan-out means creating content that proactively addresses this entire constellation of questions. Instead of making users bounce between multiple pages, you provide a single, comprehensive resource.

This doesn’t mean writing an enormous article that nobody will read. Instead, it means creating well-structured, modular content with clear headings that allow both users and AI systems to easily find answers to specific questions.

For AI engines, this approach signals that your content is thorough and authoritative. It increases the likelihood that your content will be used as a source when AI systems construct their responses to user queries.

Why query fan-out enhances model predictiveness and recall

Query fan-out substantially improves both predictiveness (anticipating valuable information) and recall (finding relevant information) in AI models.

From a predictiveness standpoint, query fan-out allows AI engines to consider multiple interpretations simultaneously. The system isn’t locked into a single prediction about user intent. By exploring various possibilities in parallel, the AI can identify the most likely valuable information across different scenarios.

For recall capabilities, traditional single-query approaches often miss relevant information that doesn’t precisely match the query terms. By fanning out to related concepts and alternative phrasings, AI engines cast a wider net that captures information expressed differently.

For instance, a medical query about “heart pain” fanned out might include searches for “chest discomfort,” “cardiac symptoms,” and “angina” – terms a user might not know to search for directly but that contain vital information.
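The recall effect in the “heart pain” example can be shown with a few lines of Python. Everything here is a toy: the `EXPANSIONS` table and the four `DOCUMENTS` are invented for illustration, and the matching is plain substring search rather than anything a real engine would use.

```python
# Hypothetical expansion table; a production system would derive these
# from a model or a curated thesaurus.
EXPANSIONS = {
    "heart pain": ["chest discomfort", "cardiac symptoms", "angina"],
}

# Toy corpus (invented for illustration).
DOCUMENTS = {
    1: "Angina is often triggered by exertion and eases with rest.",
    2: "Chest discomfort lasting more than a few minutes needs urgent care.",
    3: "Heart pain can have cardiac and non-cardiac causes.",
    4: "Coffee makers vary widely in price.",
}

def search(term: str) -> set[int]:
    """Return ids of documents containing the term (case-insensitive substring match)."""
    return {doc_id for doc_id, text in DOCUMENTS.items() if term.lower() in text.lower()}

def search_with_fan_out(query: str) -> set[int]:
    """Union the matches for the query and each of its expansions."""
    hits = search(query)
    for term in EXPANSIONS.get(query, []):
        hits |= search(term)
    return hits

print(sorted(search("heart pain")))               # [3]
print(sorted(search_with_fan_out("heart pain")))  # [1, 2, 3]
```

The single query finds only the document that uses the exact phrase, while the fanned-out version also surfaces the two documents that describe the same topic in different words.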

Evaluating fan-out limits for better system performance

While query fan-out offers powerful advantages, determining optimal fan-out limits requires careful calibration:

- Diminishing Returns Threshold: Every additional sub-query comes with computational costs but may not proportionally increase result quality

- Query Complexity Analysis: Simple, unambiguous queries might require minimal fan-out, while complex ones benefit from more extensive branching

- Real-time Performance Monitoring: If certain branches yield high-quality results quickly, resources may be dynamically reallocated to those paths

- User Context Considerations: Mobile users might benefit from more focused fan-out, while desktop users conducting research might prefer broader exploration

- Resource Allocation Models: Sophisticated systems implement economic models that allocate resources to branches expected to deliver the highest “return”
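One simple way to picture a diminishing-returns threshold is a cut-off on estimated gain combined with a hard branch budget. The sketch below assumes each candidate sub-query already comes with an estimated gain score; those scores, the `min_gain` threshold, and the `max_branches` cap are hypothetical values chosen for the example.

```python
def select_sub_queries(
    candidates: list[tuple[str, float]], min_gain: float, max_branches: int
) -> list[str]:
    """Keep candidates in descending order of estimated gain until the gain
    drops below min_gain or the branch budget is spent."""
    ranked = sorted(candidates, key=lambda c: c[1], reverse=True)
    selected = []
    for sub_query, gain in ranked:
        if gain < min_gain or len(selected) >= max_branches:
            break
        selected.append(sub_query)
    return selected

# Hypothetical (sub-query, estimated gain) pairs.
candidates = [
    ("geothermal heating cost", 0.40),
    ("geothermal heating how it works", 0.35),
    ("geothermal heating tax credits", 0.15),
    ("geothermal heating installation time", 0.06),
    ("geothermal heating history", 0.02),
]

chosen = select_sub_queries(candidates, min_gain=0.05, max_branches=4)
print(chosen)
```

With these numbers the “history” branch is dropped because its estimated gain falls below the threshold. Lowering `max_branches` for mobile contexts would be one way to express the user-context point above.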

Balancing accuracy and resource cost in AEO systems

The fundamental challenge in AI Engine Optimization is balancing the improved accuracy from extensive query fan-out against the increased resource costs:

Computational Resource Management

Processing requirements grow roughly linearly with the number of branches, because each additional sub-query adds its own retrieval and ranking work. This affects:

- Server costs

- Energy consumption

- Response time

- System capacity during peak loads

Intelligent Branch Pruning

Rather than rigid fan-out limits, sophisticated systems implement dynamic pruning strategies:

- Low-confidence branches are terminated early

- Redundant paths are merged

- High-yield branches receive more resources
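The three pruning moves above can be sketched as follows. This is one possible interpretation, not a description of any specific engine: the `Branch` class, the confidence scores, and the rule that two sub-queries are “redundant” when they contain the same set of words are all assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class Branch:
    sub_query: str
    confidence: float  # hypothetical score in [0, 1]

def normalize(sub_query: str) -> frozenset[str]:
    """Treat sub-queries with the same set of words as redundant."""
    return frozenset(sub_query.lower().split())

def prune(branches: list[Branch], min_confidence: float) -> list[Branch]:
    kept: dict[frozenset[str], Branch] = {}
    for branch in branches:
        if branch.confidence < min_confidence:
            continue  # terminate low-confidence branches early
        key = normalize(branch.sub_query)
        # Merge redundant paths, keeping the higher-confidence one.
        if key not in kept or branch.confidence > kept[key].confidence:
            kept[key] = branch
    return list(kept.values())

def allocate(branches: list[Branch], budget: int) -> dict[str, int]:
    """Split a retrieval budget in proportion to confidence."""
    total = sum(b.confidence for b in branches)
    if total == 0:
        return {}
    return {b.sub_query: round(budget * b.confidence / total) for b in branches}

branches = [
    Branch("geothermal cost", 0.6),
    Branch("cost geothermal", 0.5),         # redundant with the first
    Branch("geothermal tax credits", 0.3),
    Branch("geothermal history", 0.05),     # below the confidence floor
]

survivors = prune(branches, min_confidence=0.1)
print(allocate(survivors, budget=30))
```

Here the budget is split two to one between the remaining branches, so the higher-confidence path gets more retrieval calls.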

Tiered Processing Approaches

Not all sub-queries require the same computational intensity:

- Lightweight processing for initial filtering

- Medium-depth analysis for promising paths

- Deep processing only for the most valuable branches
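A tiered approach can be as simple as mapping each branch's score to a processing level. The thresholds (0.7 and 0.3) and the branch scores below are illustrative assumptions, not recommended values.

```python
def choose_tier(score: float) -> str:
    """Map a branch score to a processing tier (thresholds are illustrative)."""
    if score >= 0.7:
        return "deep"
    if score >= 0.3:
        return "medium"
    return "light"

# Hypothetical branch scores from an initial lightweight pass.
branch_scores = {
    "geothermal heating cost": 0.8,
    "geothermal heating rebates": 0.45,
    "geothermal heating history": 0.1,
}

plan = {sub_query: choose_tier(score) for sub_query, score in branch_scores.items()}
for sub_query, tier in plan.items():
    print(f"{tier:>6}: {sub_query}")
```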

GEO Context: Fanning Out for Generative & Creative Needs

Generative Engine Optimization (GEO) takes query fan-out into even more exciting territory. Here, we’re dealing with creative prompts that generate new content rather than just retrieving information.

Consider someone using an AI image generator with the prompt “Generate an image of a spaceship.” The traditional approach would focus on optimizing content around that exact prompt. But this misses the rich creative context that exists in the user’s mind.

In reality, that simple generative prompt fans out into numerous implicit creative dimensions:

- Style preferences: Is the user imagining a photorealistic spaceship? A stylized sci-fi illustration? A retro 80s-inspired design?

- Compositional elements: Is the spaceship flying through deep space? Docked at a station? Engaged in a battle?

- Mood and atmosphere: Should the image evoke wonder? Tension? Serenity?

- Technical aspects: What about lighting conditions? Camera angle? Level of detail?

In the GEO context, query fan-out means providing rich, structured content that helps generative AI understand these various dimensions. This involves:

- Creating detailed metadata and semantic tagging for your visual content

- Providing contextual information about style, composition, mood, and technical aspects

- Building a comprehensive vocabulary around your subject matter

- Organizing content into clear categories that align with how users think

For example, if you run a site with spaceship designs, you’d want to tag each image with style attributes, compositional elements, mood descriptors, and technical specifications. This rich metadata becomes the foundation that generative AI can draw from to create more nuanced, targeted outputs.
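As a rough sketch of what that tagging could look like in practice, the Python below models one metadata record per image and scores images against a set of wanted attributes. The `ImageMetadata` fields mirror the four dimensions discussed above; the class itself, the sample catalog, and the overlap-based score are assumptions for illustration rather than any standard format.

```python
from dataclasses import dataclass, field

@dataclass
class ImageMetadata:
    title: str
    style: list[str] = field(default_factory=list)
    composition: list[str] = field(default_factory=list)
    mood: list[str] = field(default_factory=list)
    technical: list[str] = field(default_factory=list)

    def tags(self) -> set[str]:
        """All tags across the four dimensions, as one flat set."""
        return set(self.style + self.composition + self.mood + self.technical)

def match_score(image: ImageMetadata, wanted: set[str]) -> int:
    """Count how many wanted attributes the image is tagged with."""
    return len(image.tags() & wanted)

# Invented sample catalog.
catalog = [
    ImageMetadata("Scout ship", style=["photorealistic"], composition=["deep space"],
                  mood=["serenity"], technical=["wide angle"]),
    ImageMetadata("Battle cruiser", style=["retro 80s"], composition=["battle"],
                  mood=["tension"], technical=["low angle"]),
]

wanted = {"photorealistic", "deep space", "tension"}
best = max(catalog, key=lambda image: match_score(image, wanted))
print(best.title)
```

The richer and more consistent the tags, the more of a prompt's implicit dimensions an image can be matched against.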

How query fan-out fuels prompt enrichment in GEO

Query fan-out supports prompt enrichment in Generative Engine Optimization by expanding a single prompt into the several dimensions of intent behind it:

Context Amplification

When a user provides a generative prompt like “Create a marketing campaign for sustainable products,” query fan-out expands this into multiple contextual dimensions:

- Target audience considerations

- Industry-specific sustainability messaging

- Competitive positioning

- Regulatory compliance factors

- Cultural sensitivities around environmental claims

Prompt Decomposition and Reconstruction

Fan-out enables sophisticated prompt handling by:

- Breaking complex prompts into fundamental components

- Enriching each component with relevant domain knowledge

- Recombining these enriched components into a cohesive framework

- Using this framework to guide generation
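The four steps above can be sketched in a few lines of Python. Note the simplifications: decomposition here is just phrase lookup against a hand-written `DOMAIN_NOTES` table (an assumption for this example), whereas a real system would likely use a language model for both decomposition and enrichment.

```python
# Hypothetical domain notes keyed by the phrase they enrich.
DOMAIN_NOTES = {
    "marketing campaign": "define target audience and competitive positioning",
    "sustainable products": "check environmental claims against regulations",
}

def decompose(prompt: str) -> list[str]:
    """Return the known phrases found in the prompt, in order of appearance."""
    lowered = prompt.lower()
    found = [phrase for phrase in DOMAIN_NOTES if phrase in lowered]
    return sorted(found, key=lowered.index)

def enrich(components: list[str]) -> dict[str, str]:
    """Attach domain knowledge to each component."""
    return {component: DOMAIN_NOTES[component] for component in components}

def reconstruct(prompt: str, enriched: dict[str, str]) -> str:
    """Recombine the enriched components into a brief that guides generation."""
    lines = [f"Task: {prompt}", "Considerations:"]
    lines += [f"- {component}: {note}" for component, note in enriched.items()]
    return "\n".join(lines)

prompt = "Create a marketing campaign for sustainable products"
components = decompose(prompt)
brief = reconstruct(prompt, enrich(components))
print(brief)
```

The resulting brief is what would be handed to the generative model in place of the bare prompt.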

Using multi-source retrieval for meaningful outputs

Query fan-out transforms retrieval-based generation by leveraging multiple information sources:

Diverse Source Integration

Rather than relying on a single information source, fan-out enables generative engines to pull from:

- Authoritative reference materials

- Real-world examples and case studies

- Specific domain expertise

- Cultural and contextual knowledge

- Current events and trends

Cross-Validation Mechanisms

Fan-out enables powerful cross-validation where:

- Facts can be verified across multiple sources

- Perspectives can be compared for consensus or divergence

- Outlier information can be identified and appropriately weighted
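A very simple form of cross-validation is a majority vote across sources. The sketch below assumes each source has already been reduced to a single comparable claim; the source names and values are invented for illustration.

```python
from collections import Counter

def cross_validate(claims: dict[str, str]) -> tuple[str, float, list[str]]:
    """Return the consensus value, the share of sources agreeing with it,
    and the sources that disagree."""
    counts = Counter(claims.values())
    consensus, votes = counts.most_common(1)[0]
    agreement = votes / len(claims)
    outliers = [source for source, value in claims.items() if value != consensus]
    return consensus, agreement, outliers

# Invented claims about a typical installation time.
claims = {
    "source_a": "3 weeks",
    "source_b": "3 weeks",
    "source_c": "6 weeks",
}

consensus, agreement, outliers = cross_validate(claims)
print(f"consensus={consensus}, agreement={agreement:.0%}, outliers={outliers}")
```

A real system would weight sources by authority rather than count them equally, but the idea of surfacing consensus and flagging outliers is the same.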

Tech integrations that support smart query fan-outs

Several key technologies enable effective query fan-out implementation in modern systems:

Vector Database Architecture

Modern vector databases provide the infrastructure for efficient semantic search across fan-out branches:

- High-dimensional embedding storage

- Approximate nearest neighbor algorithms

- Clustering capabilities for related concepts

- Scalable indexing for fast retrieval
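To show what semantic lookup means at its simplest, here is an exact (brute-force) nearest-neighbor search using cosine similarity. It is a deliberate simplification: real vector databases use approximate nearest neighbor indexes and embeddings with hundreds of dimensions, while the three-dimensional vectors in `INDEX` below are hand-made for illustration.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

# Hand-made toy embeddings (assumption; real ones come from an embedding model).
INDEX = {
    "geothermal installation cost": [0.9, 0.1, 0.0],
    "heat pump tax credits": [0.7, 0.6, 0.1],
    "coffee maker reviews": [0.0, 0.1, 0.9],
}

def nearest(query_vector: list[float], k: int) -> list[str]:
    """Return the k indexed items most similar to the query vector."""
    ranked = sorted(INDEX, key=lambda name: cosine(query_vector, INDEX[name]), reverse=True)
    return ranked[:k]

# One lookup per fan-out branch; here a single branch about "cost".
print(nearest([1.0, 0.0, 0.0], k=2))
```

In a fan-out setting, each sub-query would be embedded and looked up this way, and the per-branch results merged afterwards.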

Knowledge Graph Integration

Knowledge graphs enhance fan-out by providing:

- Structured relationships between entities

- Hierarchical concept organization

- Inference capabilities for implicit connections

- Cross-domain relationship mapping
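Entity-based fan-out over a knowledge graph can be pictured as a bounded walk from the seed entity along its relationships. The tiny `GRAPH` below and its relation names are invented for this sketch; real knowledge graphs are far larger and use formal schemas.

```python
from collections import deque

# Toy knowledge graph: entity -> list of (relation, target entity).
GRAPH = {
    "geothermal heating": [
        ("uses", "ground-source heat pump"),
        ("eligible_for", "tax credits"),
    ],
    "ground-source heat pump": [("requires", "ground loop")],
}

def entity_fan_out(seed: str, max_depth: int) -> list[str]:
    """Walk the graph breadth-first and emit one sub-query per relationship."""
    sub_queries = []
    seen = {seed}
    queue = deque([(seed, 0)])
    while queue:
        entity, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not expand beyond the depth limit
        for relation, target in GRAPH.get(entity, []):
            sub_queries.append(f"{entity} {relation.replace('_', ' ')} {target}")
            if target not in seen:
                seen.add(target)
                queue.append((target, depth + 1))
    return sub_queries

for sub_query in entity_fan_out("geothermal heating", max_depth=2):
    print(sub_query)
```

The `max_depth` parameter doubles as a fan-out limit: a depth of 1 yields only the seed's direct relationships, while a depth of 2 also follows the heat pump's own connections.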

Retrieval-Augmented Generation (RAG)

RAG architectures combine:

- External knowledge retrieval

- Contextual relevance scoring

- Dynamic prompt augmentation

- Generation guided by retrieved information
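The retrieve-then-augment half of a RAG pipeline can be sketched without any external libraries. In this toy version, relevance is scored by counting shared words (a crude stand-in for real relevance scoring), the `PASSAGES` are sample text written for the example, and the generation step is omitted because it would require a language model.

```python
def tokenize(text: str) -> set[str]:
    """Lowercase, strip basic punctuation, and split into a set of words."""
    return set(text.lower().replace("?", "").replace(".", "").split())

def retrieve(query: str, passages: list[str], k: int) -> list[str]:
    """Rank passages by how many query words they share (a crude relevance score)."""
    query_words = tokenize(query)
    ranked = sorted(passages, key=lambda p: len(query_words & tokenize(p)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user's question with numbered source passages."""
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(context, start=1))
    return f"Answer using only the sources below.\n{numbered}\nQuestion: {query}"

# Sample passages written for this example.
PASSAGES = [
    "A geothermal heating system moves heat between the ground and the building.",
    "Installation of a geothermal system involves burying a ground loop.",
    "Drip coffee makers are the most common type sold.",
]

query = "How does a geothermal heating system work?"
context = retrieve(query, PASSAGES, k=2)
prompt = build_prompt(query, context)
print(prompt)
```

With fan-out, `retrieve` would run once per sub-query and the combined context would go into a single augmented prompt.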

A Practical “Fan-Out” Framework for Content Creators

Let’s get practical with a framework you can actually use to implement query fan-out in your content strategy:

Step 1: Identify the “Seed” Query

Start by pinpointing the primary question or prompt that users are likely to begin with. This is your entry point, so keep it specific: “What is artificial intelligence?” is too broad, while “How does AI help with customer service automation?” gives you a focused starting point.

Step 2: Brainstorm the “Fanned-Out” Questions

This is where you map out all the natural follow-up questions. You can:

- Check Google’s “People Also Ask” boxes for related topics

- Use keyword research tools to find related questions

- Review forums like Reddit or Quora to see what real people ask next

- Interview customers or subject matter experts

- Put yourself in the user’s shoes and think about what you’d ask next

Step 3: Create Modular Content Blocks

Now it’s time to structure your content. For each fanned-out question:

- Create a distinct section with a clear, question-based heading

- Provide a direct, concise answer in the first paragraph

- Expand with supporting details, examples, or evidence

- Make each section self-contained yet connected to the overall narrative

- Use appropriate formatting (bullet points, tables, etc.) to enhance clarity

Step 4: Connect the Dots with Internal Linking & Schema

Finally, reinforce the relationships between topics by:

- Adding strategic internal links between related sections

- Using schema markup to explicitly define the content structure

- Creating clear navigational elements that help users jump to specific sections

- Ensuring your content hierarchy makes logical sense
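For the schema markup step, one common option is schema.org's `FAQPage` type expressed as JSON-LD. The short Python sketch below builds that structure from question and answer pairs; the `faq_schema` helper and the sample answers are illustrative, and you should check the search engine's current structured data guidelines before relying on any particular markup.

```python
import json

def faq_schema(pairs: list[tuple[str, str]]) -> dict:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

# Placeholder answers; use the direct answers from your own content blocks.
pairs = [
    ("How does a geothermal heating system work?", "Short, direct answer goes here."),
    ("How long does installation take?", "Short, direct answer goes here."),
]

markup = json.dumps(faq_schema(pairs), indent=2)
print(markup)
```

The printed JSON would typically be placed inside a `<script type="application/ld+json">` tag on the page, with one `Question` entry per fanned-out question from Step 2.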

This structured approach produces content that is aligned with how modern AI systems and search engines process information.

Conclusion

Query fan-out represents a fundamental shift in how AI systems understand and respond to human requests. Whether in AI Engine Optimization or Generative Engine Optimization contexts, this approach transforms simple queries into comprehensive journeys that anticipate and address users’ full spectrum of needs.

For content creators and marketers, embracing the fan-out mindset means moving beyond optimizing for single keywords to creating rich, interconnected content ecosystems. It means understanding that users don’t just want answers – they want complete solutions that address all aspects of their questions.

The most successful organizations will be those that restructure their content to serve as perfect inputs for AI systems that employ query fan-out. This means creating modular, well-structured content that clearly addresses multiple related questions while maintaining internal coherence.

As AI continues to evolve, query fan-out will only grow more sophisticated, with systems becoming increasingly adept at anticipating user needs. By implementing the principles outlined in this guide, you’ll be well-positioned to create content that thrives in this new landscape – content that doesn’t just answer questions but truly serves users’ comprehensive information needs.

FAQs

How does query fan-out improve generative engine optimization?

Query fan-out enhances generative engine optimization by expanding simple prompts into multiple dimensions that capture the full context of what a user wants to create. It helps AI understand style preferences, compositional elements, mood, technical aspects, and other creative dimensions not explicitly stated in the original prompt. This comprehensive understanding leads to outputs that more precisely match users’ creative intent, even when they don’t know how to articulate all the specific parameters they want. Additionally, by retrieving information from multiple relevant sources, query fan-out ensures generated content is grounded in diverse, high-quality reference materials.

Is query fan-out a required feature for modern AI engines?

While not technically required, query fan-out has become virtually essential for competitive, high-quality AI engines. Without it, AI systems are limited to answering precisely what was asked rather than what the user truly needs to know. Simple, single-path processing can work for basic, unambiguous queries, but falls short with complex, nuanced, or ambiguous requests. As user expectations for AI capabilities grow, query fan-out has transitioned from a nice-to-have feature to a core component that defines the difference between basic search functionality and truly intelligent assistance.

What are the best practices to manage query fan-out efficiently?

Efficient query fan-out management requires several key practices:

- Implement dynamic depth control that adjusts fan-out based on query complexity

- Use early termination for branches that show low promise

- Prioritize computational resources for high-potential paths

- Develop smart caching strategies for common sub-queries

- Employ distributed processing to handle multiple branches in parallel

- Create feedback loops where results from early branches inform later exploration

- Design tiered processing approaches that apply different levels of analysis based on branch potential

Can query fan-out affect performance metrics in AI engine optimization?

Query fan-out significantly impacts key performance metrics in AEO systems, both positively and negatively. On the positive side, it typically improves accuracy, comprehensiveness, and user satisfaction metrics by providing more complete answers that address the user’s full information needs. It can also improve handling of ambiguous or complex queries where single-path approaches often fail. However, without proper optimization, fan-out can negatively impact response time, computational cost, and system throughput due to the increased processing requirements. The key is finding the right balance that maximizes quality improvements while minimizing performance penalties.

Does query fan-out apply to conversational AI applications?

Query fan-out is particularly valuable for conversational AI applications. In conversational contexts, understanding is often built across multiple turns of dialogue, with important context implied rather than stated explicitly. Query fan-out helps conversational systems:

- Maintain context across conversation turns

- Anticipate likely follow-up questions

- Understand implicit references and requirements

- Handle the natural ambiguity of conversational language

- Provide comprehensive answers that reduce the need for multiple back-and-forth exchanges

Conversational AI systems such as ChatGPT, Claude, and Google’s conversational search are commonly described as relying on query fan-out to create more natural, helpful interactions that feel less like querying a database and more like speaking with a knowledgeable assistant.

Ridam Khare is an SEO strategist with 7+ years of experience specializing in AI-driven content creation. He helps businesses scale high-quality blogs that rank, engage, and convert.