Research teams often handle large volumes of text data. Content analysis offers a systematic way to interpret this material and extract key insights. It supports many studies by identifying trends and relationships in qualitative or quantitative content, and researchers who want stronger findings and a richer understanding of their data often include content analysis in research. The following sections explore how to align your analysis with broader research goals, adopt tools for faster processing, and validate outcomes with careful checks. These techniques can strengthen the impact of content analysis in qualitative research and help you move easily between different content analysis approaches.

Align Your Analysis with Research Goals

Every research project benefits from a clear plan that links content analysis approaches to specific goals. Researchers should begin by clarifying their central questions and deciding whether they aim to explore meaning or test established theories, which ensures they target the right variables or themes. For example, a linguistic study of social media comments might track repeated words to measure sentiment, while a historical analysis might concentrate on collating details about events across various archived texts. Researchers should also set inclusion criteria based on scope and relevance. In qualitative settings, the goal might be to interpret nuanced patterns, whereas quantitative approaches often measure frequencies or correlations. Mapping these aspects keeps the process aligned with the original purpose. Well-defined research aims safeguard the integrity of each phase, from data gathering to final interpretation, and help maintain an organized, credible approach to content analysis.
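To make the social media example concrete, here is a minimal sketch of tracking repeated words to gauge sentiment. It assumes a tiny hand-made lexicon (`POSITIVE` and `NEGATIVE` are hypothetical word lists invented for illustration) and simply counts how often those words appear; a real study would use a validated sentiment dictionary or a trained model chosen to fit its research question.

```python
import re
from collections import Counter

# Hypothetical mini-lexicon for illustration only; a real study would use a
# validated sentiment dictionary suited to its research question.
POSITIVE = {"love", "great", "helpful"}
NEGATIVE = {"hate", "broken", "slow"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_counts(comments: list[str]) -> dict[str, int]:
    """Count how often lexicon words repeat across all comments."""
    counts = Counter()
    for comment in comments:
        counts.update(tokenize(comment))
    positive = sum(counts[word] for word in POSITIVE)
    negative = sum(counts[word] for word in NEGATIVE)
    return {"positive": positive, "negative": negative, "net": positive - negative}


comments = [
    "I love this app, it is great",
    "The update is slow and the search is broken",
    "Great support, very helpful",
]
result = sentiment_counts(comments)
print(result)  # {'positive': 4, 'negative': 2, 'net': 2}
```

Word counting like this ignores negation ("not great") and sarcasm, which is one reason the research goal should decide whether a tally is enough or whether interpretive coding is needed.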

Much content analysis research is motivated by the search for techniques to infer from symbolic data what would be either too costly, no longer possible, or too obtrusive by the use of other techniques.

— Klaus Krippendorff

Relational Analysis

Relational analysis examines how concepts relate to one another and shape meaning within texts. Researchers might compare multiple versions of a historical manuscript or detect shared phrases across different sources, an approach that can reveal subtle parallels and differences. For examples of sophisticated digital methods, visit OpenMethods, which showcases a range of applications.
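One simple way to detect shared phrases between two versions of a text is to compare their word n-grams (runs of n consecutive words). The sketch below is an illustration, not a method drawn from OpenMethods: it assumes trigrams (`n=3`) and uses Jaccard similarity (shared n-grams divided by all distinct n-grams) as a rough overlap score. The function names and the two manuscript sentences are hypothetical.

```python
import re


def ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of word n-grams in a text (lowercased, punctuation dropped)."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def shared_phrases(a: str, b: str, n: int = 3) -> set[str]:
    """Phrases of n words that occur in both texts."""
    return {" ".join(gram) for gram in ngrams(a, n) & ngrams(b, n)}


def phrase_overlap(a: str, b: str, n: int = 3) -> float:
    """Jaccard similarity of the two n-gram sets (0 = nothing shared, 1 = identical sets)."""
    grams_a, grams_b = ngrams(a, n), ngrams(b, n)
    union = grams_a | grams_b
    return len(grams_a & grams_b) / len(union) if union else 0.0


version_1 = "The king granted the charter to the town in spring"
version_2 = "In spring the king granted the charter to the city"
print(sorted(shared_phrases(version_1, version_2)))
# ['charter to the', 'granted the charter', 'king granted the', 'the charter to', 'the king granted']
print(round(phrase_overlap(version_1, version_2), 3))  # 0.455
```

Shorter n-grams catch more loose parallels but also more coincidental matches; the choice of `n` is a judgment call that depends on the texts being compared.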

Match analytical techniques with the study objective

Researchers must connect each aim with a suitable analysis method. A project examining group differences might use ANOVA, while a text-oriented study might focus on code frequencies. This alignment helps answer targeted questions, keeps the data relevant, and maintains clear logical links between questions, hypotheses, and the chosen analytical paths.
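The two approaches can meet when code frequencies become the outcome variable. As a minimal sketch, assume a hypothetical study that counts how often one code appears per article across three outlet types; a one-way ANOVA then asks whether the group means differ more than the within-group spread would suggest. The function below computes only the F statistic by hand; in practice, `scipy.stats.f_oneway` returns both F and a p-value.

```python
def one_way_anova_f(groups: list[list[float]]) -> float:
    """Return the one-way ANOVA F statistic for two or more groups."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or any(not g for g in groups) or n <= k:
        raise ValueError("need two or more non-empty groups and more observations than groups")
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n
    # Between-group sum of squares: how far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        raise ValueError("F is undefined when there is no within-group variation")
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical code counts per article for three outlet types.
news, blogs, forums = [2, 3, 4], [5, 6, 7], [8, 9, 10]
f_stat = one_way_anova_f([news, blogs, forums])
print(f_stat)  # 27.0
```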

Integrate theories to guide interpretation

A theory-based approach helps explain why observed patterns arise. One source states that “A theoretical framework provides the theoretical assumptions for the larger context of a study”. By anchoring codes in theory, researchers interpret text more consistently and convincingly.

Adjust methods for content analysis in qualitative research

In qualitative contexts, methods must move beyond simple tallies to interpret deeper meaning. One study describes qualitative content analysis as “a research method for the subjective interpretation of the content of text data”. Researchers refine code definitions, track emergent themes, and consider context to maintain accuracy, which encourages richer interpretive findings.

Adopt Technology to Speed Up Content Analysis

Researchers often handle large datasets, and effective tools can reduce manual sorting and shorten overall project timelines. Spreadsheets and basic text editors might work for small volumes, but dedicated software offers automated coding, frequency tracking, and graphing, which frees analysts to spend more time interpreting results. Artificial intelligence has also opened new options, from quick data structuring to real-time pattern detection. For example, many platforms now apply machine learning to highlight unexpected themes in open-ended responses, which helps surface overlooked trends without exhaustive manual scanning. Cloud solutions add collaboration features, so multiple team members can code at the same time while working from a consistent version of the dataset. Encryption and access controls protect sensitive information, a particularly pressing concern in some research fields. These tools also let teams refine their approach on the fly, test new queries, and switch between quantitative and qualitative outputs. This flexibility can make content analysis faster, more scalable, and more precise.

Use content analysis tools for data structuring

Researchers transform raw text into coded sets. One definition, cited by the Columbia University Mailman School of Public Health, describes content analysis as “any technique for making inferences by objectively and systematically identifying specified characteristics of messages”. The resulting structure supports deeper exploration and grouping, and it highlights critical patterns.
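A minimal sketch of turning raw responses into a coded set, assuming a deductive codebook where each code is tied to a few indicator keywords. The codebook, its codes, and the sample responses are all hypothetical. Keyword matching is only a crude stand-in for human coding: it cannot read context, so its output is a first pass for coders to review, not a final result.

```python
import re

# Hypothetical codebook: each code is linked to a few indicator keywords.
CODEBOOK = {
    "COST": {"price", "expensive", "cheap", "cost"},
    "SERVICE": {"staff", "support", "help"},
    "QUALITY": {"durable", "broke", "quality"},
}


def code_segment(segment: str, codebook: dict[str, set[str]] = CODEBOOK) -> list[str]:
    """Return the sorted codes whose keywords appear in the segment."""
    words = set(re.findall(r"[a-z]+", segment.lower()))
    return sorted(code for code, keywords in codebook.items() if words & keywords)


def build_coded_set(segments: list[str]) -> list[dict]:
    """Pair every raw segment with its assigned codes."""
    return [{"text": s, "codes": code_segment(s)} for s in segments]


responses = [
    "The price was too expensive",
    "Support staff were quick to help",
    "It broke after a week, poor quality for the cost",
]
for row in build_coded_set(responses):
    print(row["codes"], "<-", row["text"])
```

Because a segment can carry several codes (the third response is coded both `COST` and `QUALITY`), the output keeps a list per segment rather than forcing a single label.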

Employ AI-based tools for large datasets

AI-based methods can handle ever-expanding data volumes. One report found that “54% of businesses say using AI led to cost savings”. Machine learning can quickly sift data, flag unusual items, and support real-time monitoring, which speeds analysis, eases the burden of manual coding, and can also aid teamwork.

Track themes efficiently through software

Software builds coded groups, sums keyword frequencies, and pinpoints trending topics. One provider notes that “Thematic analysis software can save your team hundreds of hours”. By highlighting recurring ideas, these tools help researchers focus on the most important themes before final interpretation, and many can also combine scattered data sources.
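The counting behind "trending" themes can be sketched in a few lines. This assumes segments have already been coded and tagged with a time period (the periods and codes below are hypothetical), and it defines a rising theme simply as one whose raw count grew between two periods. If periods contain different numbers of segments, dividing by each period's total would give a fairer comparison.

```python
from collections import Counter, defaultdict


def theme_counts_by_period(coded_segments):
    """Tally how many segments carry each code, per time period."""
    by_period = defaultdict(Counter)
    for period, codes in coded_segments:
        by_period[period].update(set(codes))  # count a code once per segment
    return by_period


def rising_themes(by_period, earlier, later):
    """Codes whose count grew between two periods, largest increase first."""
    before = by_period.get(earlier, Counter())
    after = by_period.get(later, Counter())
    growth = [(code, after[code] - before[code]) for code in set(before) | set(after)]
    return sorted(((c, d) for c, d in growth if d > 0), key=lambda item: (-item[1], item[0]))


# Hypothetical coded segments as (period, codes) pairs.
coded_segments = [
    ("2023", ["COST"]),
    ("2023", ["SERVICE"]),
    ("2023", ["COST", "SERVICE"]),
    ("2024", ["COST"]),
    ("2024", ["COST", "QUALITY"]),
    ("2024", ["COST", "QUALITY"]),
]
counts = theme_counts_by_period(coded_segments)
rising = rising_themes(counts, "2023", "2024")
print(rising)  # [('QUALITY', 2), ('COST', 1)]
```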

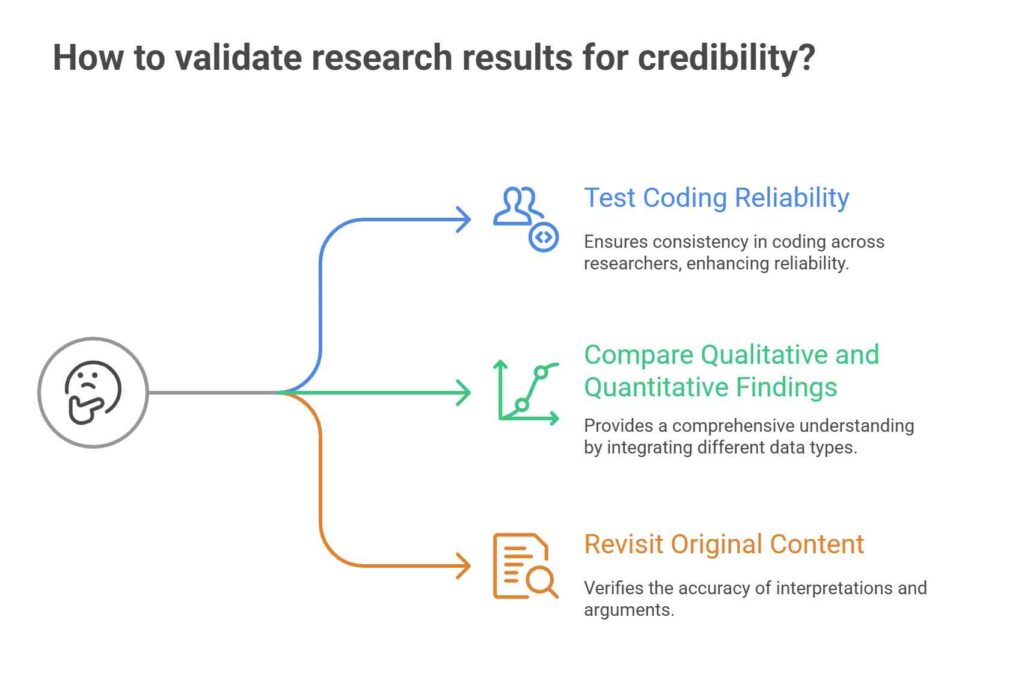

Validate Results for More Credible Insights

Once coding is complete, researchers need to confirm that their findings are accurate and as free from bias as possible. Double-checking data groups, verifying sample sizes, and consulting external sources can prevent misinterpretation, and trustworthy findings come from a thorough review of each coding step. Some teams compare early findings with earlier drafts of the texts or with additional literature to see whether the identified themes match broader patterns. Additional checks might involve numerical measures, such as correlation tests, to see whether qualitative codes align with quantitative outcomes like survey data. Peer review or team-based coding sessions also strengthen results: different individuals independently examine the same dataset, then discuss any differences in interpretation. When agreement remains consistently high, researchers gain confidence in the study’s reliability and validity. Ultimately, these review processes protect the integrity of the entire project and make the final conclusions more trustworthy across different uses of content analysis.

Test coding reliability across researchers

Teams achieve consistency by comparing how different coders label identical data. One author highlights that “strong agreement among coders determines the quality of the study”. This process surfaces disagreements and prompts discussion, which helps refine the codebook, raises confidence in the final outputs, and encourages a clear workflow.
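Agreement between two coders is commonly summarized with Cohen's kappa, which corrects raw percent agreement for the agreement expected by chance: kappa = (observed − expected) / (1 − expected). The sketch below assumes two coders labeled the same ten segments with hypothetical sentiment codes. For more than two coders or missing labels, Krippendorff's alpha is a common alternative.

```python
from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Cohen's kappa for two coders who labeled the same segments."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same, non-empty set of segments")
    n = len(coder_a)
    # Observed agreement: share of segments given the same label.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected chance agreement, from each coder's label frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when both coders use a single label")
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two coders for the same ten segments.
coder_a = ["POS", "POS", "NEG", "NEG", "NEU", "POS", "NEG", "NEU", "POS", "POS"]
coder_b = ["POS", "NEG", "NEG", "NEG", "NEU", "POS", "NEG", "POS", "POS", "POS"]
kappa = cohens_kappa(coder_a, coder_b)
print(round(kappa, 3))  # 0.672
```

Here the coders agree on 8 of 10 segments (0.80), but chance alone would produce 0.39 agreement given their label frequencies, so kappa is lower than the raw percentage.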

Compare qualitative coding with quantitative findings

Combining words and numbers strengthens research outcomes. One source states, “Quantitative research is expressed in numbers…qualitative research is expressed in words”. Setting the two side by side shows whether coded themes track trends in numeric surveys, experiments, or observational data, and helps researchers verify that thematic groups reflect measurable shifts.
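One way to set codes beside numbers is to correlate the presence of a code with a survey score. This minimal sketch assumes hypothetical data: each respondent gets 1 if their open-ended answer was coded `FRUSTRATION` and 0 otherwise, alongside a 1 to 5 satisfaction rating. Pearson's r computed on a 0/1 variable is the point-biserial correlation. Six respondents only illustrate the arithmetic; a real check would need an adequate sample and a significance test, and correlation alone does not show causation.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation; with a 0/1 variable this is the point-biserial r."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two values")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / (spread_x * spread_y)


# Hypothetical data: 1 = answer coded FRUSTRATION, paired with a 1-5 satisfaction rating.
coded_frustration = [1, 1, 1, 0, 0, 0]
satisfaction_score = [2, 1, 2, 4, 5, 4]
r = pearson_r(coded_frustration, satisfaction_score)
print(round(r, 2))  # -0.94
```

A strong negative r here would suggest the qualitative theme and the quantitative rating point the same way, which is the kind of cross-check the paragraph above describes.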

Revisit original content to check arguments

Researchers often return to the initial text to confirm that coded findings still match the source. This can reveal mislabeled segments as well as new findings that were missed earlier. Double-checking each theme’s origin ensures that arguments remain consistent and well grounded in the analyzed data.
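Part of this check can be automated when each code is stored with the excerpt it was based on. The sketch below assumes a hypothetical list of `(code, quote)` pairs and flags any quote that no longer appears in the source text, ignoring case and differences in whitespace. It catches excerpts that were mistyped or drawn from the wrong document; whether a quote that is present actually supports its code still needs a human reader.

```python
import re


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so line breaks do not cause false misses."""
    return re.sub(r"\s+", " ", text).strip().lower()


def unmatched_excerpts(source: str, excerpts: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Return (code, quote) pairs whose quote cannot be found in the source text."""
    normalized_source = normalize(source)
    return [(code, quote) for code, quote in excerpts if normalize(quote) not in normalized_source]


# Hypothetical source document and coded excerpts.
source_text = "Staff were friendly.\nHowever, the checkout  process was slow."
excerpts = [
    ("SERVICE", "Staff were friendly"),
    ("SPEED", "the checkout process was slow"),
    ("COST", "prices were too high"),
]
print(unmatched_excerpts(source_text, excerpts))  # [('COST', 'prices were too high')]
```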

Conclusion

Effective content analysis combines clear goals, targeted methods, and well-checked findings. Researchers who define precise goals and consistently match their toolset to those aims produce more accurate interpretations. Digital solutions accelerate large-scale coding by automating message grouping, frequency counting, or sentiment mapping, which reduces repetitive labor. Yet tools alone do not guarantee strong outcomes. Analysts must verify coding consistency through cross-checks and compare qualitative themes with quantitative results for corroboration. Returning to the source content is also critical, because it ensures each conclusion is anchored in real data. This balanced approach to content analysis builds trust among peers and stakeholders who depend on reliable findings. Researchers who follow these practices are better prepared to handle varied data sources, whether text, images, or more complex records, with clarity and precision. They strengthen research quality and remain adaptable to the demands of new inquiries.

FAQs

This section addresses common questions about content analysis. Readers often ask about its primary goal, how qualitative and quantitative approaches compare, the core methods, automation options, and ways to measure accuracy. Below are concise answers for quick reference.

What is the main purpose of content analysis in research?

How does content analysis in qualitative research differ from quantitative?

Which are the key types of content analysis methods?

Can content analysis be automated with tools?

How do you measure the accuracy of content analysis results?

Ridam Khare is an SEO strategist with 7+ years of experience specializing in AI-driven content creation. He helps businesses scale high-quality blogs that rank, engage, and convert.