SEO problems can quietly sabotage your website's performance. One day you're ranking well; the next, your traffic has plummeted. These issues range from technical glitches to content problems that confuse search engines. The good news? Most SEO problems have straightforward fixes once you know what to look for. Let's walk through the most common SEO issues and practical ways to fix them, so you can restore your search visibility and stop losing traffic to competitors.

Google only loves you when everyone else loves you first.

— Wendy Piersall

Identify and Resolve Technical SEO Problems

Fix broken links and 404 errors

Broken links waste crawl budget and frustrate visitors. Run a site audit using tools like Screaming Frog to find these issues. For broken internal links, you have three options:

- Restore the missing content

- Redirect with a 301 to a relevant page

- Update the link to point somewhere useful

Pay special attention to 404 pages with traffic or backlinks – these should always get proper redirects to preserve user experience and link equity.
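If you'd rather script a quick check between full audits, here is a minimal sketch that pulls every `<a href>` out of pages you've already fetched and reports internal links that return an error status. The function names and the `pages` input (URL mapped to HTML, for example from a crawler export) are hypothetical; the optional live lookup uses a HEAD request, which some servers mishandle, so treat it as a first pass rather than a replacement for a crawler like Screaming Frog.

```python
from html.parser import HTMLParser
from typing import Callable
from urllib.error import HTTPError
from urllib.parse import urljoin, urlparse
from urllib.request import Request, urlopen


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def http_status(url: str) -> int:
    """Live status check with a HEAD request (urlopen follows redirects).

    Some servers mishandle HEAD, so treat results as a first pass.
    """
    try:
        with urlopen(Request(url, method="HEAD"), timeout=10) as resp:
            return resp.status
    except HTTPError as err:
        return err.code


def find_broken_internal_links(
    pages: dict[str, str],
    status_of: Callable[[str], int] = http_status,
) -> list[tuple[str, str, int]]:
    """Return (source page, broken URL, status) for internal links answering 4xx/5xx."""
    broken = []
    for page_url, html in pages.items():
        parser = LinkExtractor()
        parser.feed(html)
        site = urlparse(page_url).netloc
        for href in parser.hrefs:
            # Resolve relative links and drop #fragments before checking.
            target = urljoin(page_url, href).split("#")[0]
            if urlparse(target).netloc != site:
                continue  # external, mailto:, tel: and similar links are skipped
            status = status_of(target)
            if status >= 400:
                broken.append((page_url, target, status))
    return broken
```

Each result gives you the page to edit and the dead target, which maps directly onto the three options above: restore, redirect, or update the link.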

Improve crawlability with clean site architecture

A confusing structure prevents search engines from discovering important pages. Aim for a flat site architecture where no page is more than three clicks from the homepage. Create logical categories and implement a clear internal linking strategy. Regularly check for orphaned pages and incorporate them into your site structure. Keep your XML sitemap updated and submitted to Google Search Console.
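Click depth and orphaned pages are both graph questions, so they can be checked with a breadth-first search over your internal links. This is a sketch under the assumption that you have a link graph (page mapped to the pages it links to) from a crawl and the list of URLs in your XML sitemap; the function names and sample URLs are hypothetical.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the homepage; depth = fewest clicks to reach a page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def architecture_report(
    links: dict[str, list[str]],
    home: str,
    sitemap_urls: list[str],
    max_depth: int = 3,
) -> tuple[list[str], list[str]]:
    """Return (pages deeper than max_depth, sitemap pages no internal link reaches)."""
    depths = click_depths(links, home)
    too_deep = sorted(url for url, d in depths.items() if d > max_depth)
    orphans = sorted(set(sitemap_urls) - set(depths))
    return too_deep, orphans
```

Pages in the first list need links from higher up the hierarchy; pages in the second list are orphans that should be linked from a relevant category or removed from the sitemap.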

Use HTTPS and improve site speed

HTTPS and page speed are both Google ranking signals, though relatively lightweight ones, and both shape how visitors experience your site. If you haven't migrated to HTTPS, make it a priority. For speed improvements:

- Compress images without visible quality loss

- Enable browser caching

- Minify CSS/JS files

- Consider implementing a CDN

Use PageSpeed Insights to identify specific issues and prioritize fixes that will have the biggest impact on performance metrics.
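Several items on this list show up in a page's HTTP response headers, so a quick header check can confirm they are actually in place. A minimal sketch, assuming you've copied the headers from your browser's developer tools or `curl -I`; `audit_headers` is a hypothetical helper, and it checks only for the presence of HTTPS, a caching header, and gzip or Brotli compression, not whether the values are well tuned.

```python
def audit_headers(url: str, headers: dict[str, str]) -> list[str]:
    """Flag missing HTTPS, caching, and compression signals for one response."""
    h = {name.lower(): value for name, value in headers.items()}  # header names are case-insensitive
    issues = []
    if not url.lower().startswith("https://"):
        issues.append("served over HTTP, not HTTPS")
    if "max-age" not in h.get("cache-control", "").lower() and "expires" not in h:
        issues.append("no browser caching (Cache-Control max-age or Expires)")
    # Only meaningful for text assets such as HTML, CSS, and JS; images are already compressed.
    if h.get("content-encoding", "").lower() not in ("gzip", "br"):
        issues.append("not compressed (gzip or Brotli)")
    return issues
```

Run it against the HTML document and a few CSS/JS files; PageSpeed Insights is still the better guide to which fix will matter most.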

Address On-Page SEO Issues Immediately

Use unique title tags and meta descriptions

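Generic or duplicated titles and descriptions waste opportunities to improve click-through rate (CTR) and rankings. Give each page a unique title tag with your target keyword near the beginning, and keep it to roughly 50-60 characters to avoid truncation. Search results truncate titles by display width rather than character count, so treat that range as a guideline.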

While meta descriptions don’t directly impact rankings, compelling ones with clear calls to action can significantly improve click-through rates. Aim for 150-160 characters and include your target keyword naturally. For product pages, highlight unique selling points to stand out from competitors.
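To check these guidelines across many pages at once, a short script can read each page's `<title>` and meta description, compare their lengths with the ranges above, and flag values reused on more than one page. This is a sketch that assumes you already have each page's HTML; the helper names are hypothetical, and character counts only approximate how much Google will actually display.

```python
from collections import defaultdict
from html.parser import HTMLParser


class HeadParser(HTMLParser):
    """Extracts the <title> text and the meta description."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (a.get("name") or "").lower() == "description":
            self.description = (a.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_titles_and_descriptions(pages: dict[str, str]) -> list[str]:
    issues = []
    seen = defaultdict(list)  # (field, text) -> URLs using that exact text
    for url, html in pages.items():
        p = HeadParser()
        p.feed(html)
        title = " ".join(p.title.split())  # collapse whitespace and newlines
        if not 50 <= len(title) <= 60:
            issues.append(f"{url}: title is {len(title)} chars (aim for 50-60)")
        if not 150 <= len(p.description) <= 160:
            issues.append(f"{url}: meta description is {len(p.description)} chars (aim for 150-160)")
        seen[("title", title)].append(url)
        if p.description:
            seen[("meta description", p.description)].append(url)
    for (field, _), urls in seen.items():
        if len(urls) > 1:
            issues.append(f"duplicate {field} on {', '.join(urls)}")
    return issues
```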

Remove or rewrite duplicate content

Duplicate content forces search engines to choose which version to rank, often lowering positions for all versions. Use tools like Siteliner to identify duplicated content across your site. Your options for fixing duplicate content include:

- Implementing canonical tags to indicate the preferred version

- Rewriting content to make each page unique

- Consolidating similar pages into comprehensive guides

- Handling URL parameters consistently with canonical tags and clean internal links (Google retired Search Console's URL Parameters tool in 2022)
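Tools like Siteliner handle this for you, but the underlying idea of near-duplicate detection is easy to illustrate. The sketch below uses a standard technique, word shingling with Jaccard similarity: it breaks each page into overlapping five-word sequences and measures how many they share. It is not a description of how Siteliner works internally. The 0.8 threshold and the function names are assumptions to tune for your own site.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Overlapping k-word sequences; more shared shingles means more shared wording."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(pages: dict[str, str], threshold: float = 0.8, k: int = 5):
    """Return (url_a, url_b, similarity) for page pairs at or above the threshold."""
    sigs = {url: shingles(text, k) for url, text in pages.items()}
    pairs = []
    for a, b in combinations(sigs, 2):  # compares every pair: fine for hundreds of pages
        score = jaccard(sigs[a], sigs[b])
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return sorted(pairs, key=lambda p: -p[2])
```

Pairs near the top of the list are candidates for a canonical tag, a rewrite, or consolidation. For very large sites, pairwise comparison gets slow and techniques such as MinHash are the usual next step.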

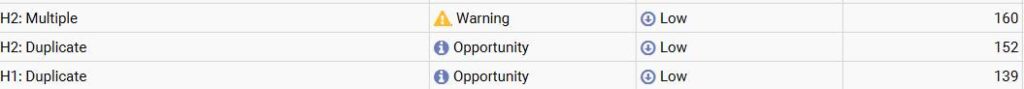

Fix missing or misused header tags

Header tags create a hierarchy that helps search engines understand your content. As a best practice, give each page a single H1 tag that contains your primary keyword. Use H2 and H3 tags to organize content logically without skipping levels. Don't use header tags purely for styling; that muddies the content structure search engines rely on.
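Both rules in this paragraph can be checked mechanically. A minimal sketch that records heading levels in document order, then reports pages without exactly one H1 and any jump of more than one level (for example, an H2 followed directly by an H4). The helper names are hypothetical, and the one-H1 rule is the convention this article recommends, not a hard requirement from search engines.

```python
from html.parser import HTMLParser


class HeadingParser(HTMLParser):
    """Records heading levels (1-6) in the order they appear."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    parser = HeadingParser()
    parser.feed(html)
    issues = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        issues.append(f"expected one H1, found {h1_count}")
    for prev, cur in zip(parser.levels, parser.levels[1:]):
        if cur > prev + 1:  # going deeper by more than one level skips a level
            issues.append(f"skipped level: H{prev} -> H{cur}")
    return issues
```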

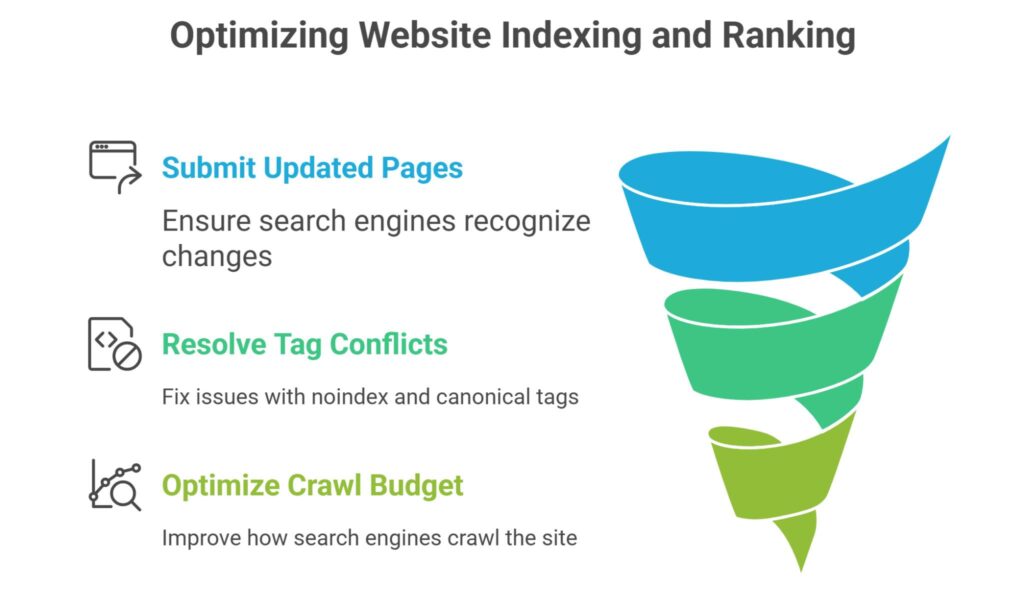

Handle Indexing and Ranking Issues Fast

Submit updated pages via Search Console

When you make significant changes, don't wait for Google to discover them naturally; that could take weeks. Use the URL Inspection tool in Google Search Console to request indexing of updated URLs. This asks Google to recrawl the page, but it doesn't guarantee timing, and the number of requests you can make per day is limited. For larger sites, prioritize your most important landing pages and those with the highest traffic potential.
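URL Inspection requests are made one URL at a time. On larger sites, an XML sitemap with accurate `lastmod` dates is a complementary way to flag updated pages, and Google says it uses `lastmod` when the dates are consistently accurate. A minimal sketch using Python's standard library; the `pages` mapping and `build_sitemap` name are hypothetical, and the dates should come from real content changes, not the time the file was generated.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, date]) -> str:
    """Serialize {url: last_modified} as sitemap XML, most recently changed first."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, modified in sorted(pages.items(), key=lambda kv: kv[1], reverse=True):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes &, <, > for us
        ET.SubElement(entry, "lastmod").text = modified.isoformat()  # W3C date, e.g. 2024-06-01
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

After uploading the file, submit or resubmit it in Search Console's Sitemaps report.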

Resolve noindex and canonical tag conflicts

Conflicting directives send search engines mixed signals. A common example is a page with a noindex tag whose canonical tag points to a different URL. Audit your site for pages with noindex tags to ensure they appear only on pages you genuinely want to keep out of search results. Check that canonical tags always point to the version you want to rank, and that the target page is itself indexable.
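An audit for these conflicts boils down to reading two tags per page and cross-referencing them. This sketch assumes a hypothetical `pages` mapping of absolute URL to HTML and assumes canonical hrefs are absolute. It flags two cases: a noindexed page that canonicalizes elsewhere, and a canonical that points at a noindexed page.

```python
from html.parser import HTMLParser


class DirectiveParser(HTMLParser):
    """Reads the robots meta content and the rel=canonical href."""

    def __init__(self) -> None:
        super().__init__()
        self.robots = ""
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("name") or "").lower() == "robots":
            self.robots = (a.get("content") or "").lower()
        elif tag == "link" and "canonical" in (a.get("rel") or "").lower().split():
            self.canonical = a.get("href")


def directive_conflicts(pages: dict[str, str]) -> list[str]:
    info = {}  # url -> (is_noindexed, canonical_href)
    for url, html in pages.items():
        p = DirectiveParser()
        p.feed(html)
        info[url] = ("noindex" in p.robots, p.canonical)
    issues = []
    for url, (noindex, canonical) in info.items():
        points_elsewhere = canonical is not None and canonical != url
        if noindex and points_elsewhere:
            issues.append(f"{url}: noindex plus canonical to {canonical}")
        target = info.get(canonical)
        if points_elsewhere and target is not None and target[0]:
            issues.append(f"{url}: canonical target {canonical} is noindexed")
    return issues
```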

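Remember that robots.txt blocks crawling, not indexing: a blocked URL can still appear in search results if other sites link to it. To keep a page out of search results, use a noindex tag and leave the page crawlable so Google can see the tag. For truly private content, use password protection instead.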

Improve crawl budget through optimized robots.txt

On large sites, search engines may not crawl every page regularly. Use your robots.txt file to steer crawlers away from low-value pages, such as internal search results or cart pages, and toward valuable content. Remove any accidental blocking of resources like CSS or JavaScript files, which Google needs to render your pages. Monitor the Crawl Stats report in Search Console to see whether your changes improve crawling efficiency over time.
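Before deploying a robots.txt change, it helps to test the draft against URLs you know must stay crawlable (key pages, CSS, JS) and URLs you mean to block. A minimal sketch using Python's built-in `urllib.robotparser`; the rules and URLs are hypothetical examples. One important caveat: this parser applies the first matching rule in file order and does not understand the `*` and `$` wildcards, while Google applies the most specific matching rule and supports wildcards. So keep `Allow` lines above broader `Disallow` lines, and confirm the final file with Google's own tooling.

```python
from urllib.robotparser import RobotFileParser


def audit_robots(
    robots_txt: str,
    must_allow: list[str],
    should_block: list[str],
    user_agent: str = "Googlebot",
) -> dict[str, list[str]]:
    """Check a robots.txt draft offline against URLs you expect to be allowed or blocked."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() works without fetching anything
    return {
        "accidentally_blocked": [u for u in must_allow if not parser.can_fetch(user_agent, u)],
        "still_crawlable": [u for u in should_block if parser.can_fetch(user_agent, u)],
    }


draft = """
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /cart/
Disallow: /search
"""

report = audit_robots(
    draft,
    must_allow=[
        "https://example.com/blog/seo-guide",
        "https://example.com/assets/site.css",
        "https://example.com/wp-admin/admin-ajax.php",
    ],
    should_block=[
        "https://example.com/cart/checkout",
        "https://example.com/search?q=boots",
        "https://example.com/tag/misc",
    ],
)
print(report)
```

Here `/tag/misc` would show up under `still_crawlable`, telling you the draft doesn't cover it yet.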

Solve SEO Problems Before They Harm Rankings

Staying ahead of SEO problems is far easier than recovering from penalties or lost rankings. Implement regular site audits to catch issues before they impact visibility. When you do discover problems, prioritize fixes based on their potential impact – focus first on issues affecting your highest-traffic pages or most valuable keywords.

Remember that SEO is an ongoing process, not a one-time fix. By proactively addressing these common SEO issues, you’ll create a stronger foundation for sustainable search visibility and traffic growth. The most successful sites aren’t those without problems, but those that identify and fix issues quickly before they harm rankings.

FAQs

What are the most common SEO problems small businesses face?

Small businesses typically struggle with technical issues like improper redirects and missing meta tags. Content problems include thin content and lack of keyword research. Local SEO issues are also common, such as unclaimed Google Business Profiles and inconsistent NAP information across directories. Budget constraints often lead to using outdated tactics or ignoring analytics that could identify problems early.

How do I fix technical SEO problems affecting site speed?

Compress images using tools like TinyPNG, implement browser caching, and minimize HTTP requests by combining CSS/JS files. Enable GZIP compression and consider upgrading from shared hosting. For WordPress sites, perform regular database cleanups, limit plugins to essential ones, and use a caching plugin to improve overall performance.

Why are my pages not indexing on Google after SEO optimization?

Check if robots.txt is blocking search engines or if noindex tags are present. Verify you don’t have a sitewide “noindex” setting active. Ensure content is unique and valuable, as Google may ignore thin content. Look for manual actions in Search Console and submit URLs directly for indexing. If it still doesn’t index after multiple attempts, your content may not meet quality thresholds.
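The first checks in that answer can be bundled into one diagnostic. This sketch looks for the three common technical blockers: a robots.txt rule, a noindex robots meta tag, and a noindex in the `X-Robots-Tag` HTTP header (a server-level setting that can apply a noindex sitewide without touching any page template). The `indexing_blockers` name and its inputs are hypothetical. It can't judge content quality, and like any `urllib.robotparser` check it ignores Google's wildcard syntax.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects content values from robots and googlebot meta tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("name") or "").lower() in ("robots", "googlebot"):
            self.directives.append((a.get("content") or "").lower())


def indexing_blockers(url: str, robots_txt: str, headers: dict[str, str], html: str) -> list[str]:
    reasons = []
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    if not rp.can_fetch("Googlebot", url):
        # If crawling is blocked, Google never sees the page's meta tags either.
        reasons.append("blocked by robots.txt")
    lowered = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    if "noindex" in lowered.get("x-robots-tag", "").lower():
        reasons.append("noindex in X-Robots-Tag HTTP header")
    meta = RobotsMetaParser()
    meta.feed(html)
    if any("noindex" in d for d in meta.directives):
        reasons.append("noindex in robots meta tag")
    return reasons
```

An empty list means none of these blockers apply, which points you toward the content-quality and manual-action checks instead.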

What SEO tools can help detect technical and on-page issues?

Screaming Frog excels at finding technical issues like broken links and duplicate titles. Semrush and Ahrefs offer comprehensive site audits with competitive insights. Google's own tools, such as Search Console and PageSpeed Insights, show directly how Google sees your site. Free tools like Lighthouse (built into Chrome DevTools) can identify performance, accessibility, and SEO issues affecting your rankings.

How does duplicate content impact SEO performance?

Duplicate content forces search engines to choose which version to rank, diluting link equity across multiple URLs. This often results in lower rankings for all versions. When duplication is widespread, search engines may also spend crawl budget on redundant URLs and crawl your important pages less often. For e-commerce sites with product variations, canonical tags that point variant URLs to the main product page can prevent duplication while keeping the variants available to shoppers. Search Console's URL Parameters tool, once used for this, was retired in 2022.

Ridam Khare is an SEO strategist with 7+ years of experience specializing in AI-driven content creation. He helps businesses scale high-quality blogs that rank, engage, and convert.