This post explains vector search basics by breaking down its core components. It covers how text embedding models convert language into numeric vectors, describes semantic vector spaces and their role in improving search intent, and examines nearest search methods. Readers gain clear insights into modern search techniques.

What is vector search?

Vector search is an advanced method to retrieve data by converting text and content into numeric vectors. This approach not only represents the essence and context but also goes beyond simple keyword matching. It allows faster outcomes and more relevant search results in complex, multidimensional databases, improving both user satisfaction and how well they work across industries globally.

How does vector search differ from keyword search?

Vector search contrasts with keyword search by using numeric forms instead of solely relying on exact word matches. Traditional methods depend on building inverted indexes and employing preset rules, whereas vector search relies on deep learning models to measure semantic similarity. This allows search systems to understand underlying intent and return in context relevant results more quickly.

“Unlike keyword search, which relies on exact matches, vector search enables semantic retrieval — you can find what you mean, not just what you type.”

— The Gradient Flow Podcast

Where did vector search originate from?

The origins of vector search trace back to early text form techniques, evolving from bag-of-words models and TF-IDF weighting to latent semantic indexing. Later, methods such as word2vec and modern transformer models advanced vector forms, laying a base for today’s AI-driven vector search applications that set the stage for digital new ideas globally.

Why is vector search growing in importance today?

Vector search is increasingly crucial due to its ability to represent nuanced user intent while providing tailored outcomes. Enhanced by AI, it boosts e-commerce conversions, improves the features of chatbots, and scales efficiently for large datasets. As online searches rely more on semantics rather than mere keywords, businesses adopt vector search to exceed old methods significantly.

How text embedding models power vector search

Text embedding models enable modern search by converting language into multidimensional vectors. These models represent the subtle meaning underlying text, allowing search systems to understand context deeply. They power semantic comparisons, effectively connecting the gap between surface keywords and conceptual queries, and play a crucial role in improving retrieval precision across a variety of industries.

What are text embedding models in simple terms?

Text embedding models simplify language by converting words and phrases into numeric vectors in a multidimensional space. They allow computers to understand relationships between words with math, making semantic connections more apparent. Models like text-embedding-ada-002 help search engines grasp meaning beyond surface words, making sure that results are precise and context-aware consistently.

How vector representation of text makes semantic understanding possible

Vector form converts text into similar numeric forms, bridging shape gaps between surface words and their underlying meanings. This process allows search engines to measure likeness using algorithms such as cosine distance. By mapping text into constant vector spaces, systems retrieve related content more correctly and reflect true intent across varied queries.

What role do embedding models play in semantic precision?

Embedding models improve semantic precision by converting unstructured text into structured data arrays. They represent word nuances and context, reducing vagueness in complex search queries. Advanced models map connections among terms fully, leading to more accurate and relevant results for complex searches and making sure that systems deliver outcomes that truly match user intent.

Explore the role of semantic vector space

Semantic vector space arranges data in a multidimensional grid system, which allows similarity comparisons well beyond simple keywords. It provides a structural framework to assess relationships between concepts and concepts. This approach underlies advanced semantic search, allowing systems to rank results based on context relevance and intrinsic meaning while enabling search speed across diverse uses globally.

What is a semantic vector space?

A semantic vector space maps words and phrases into a constant layout where similar concepts simply lie close together. It converts text into numeric form, helping computations that focus on meaning rather than just surface form. This space models contexts and term connections, aiding in precise retrieval across varied search cases despite unclear phrasing for improved precision.

How does mapping help find related content more accurately?

Mapping text into a vector space allows systems to compare the complex relationships between items. By measuring distances with metrics like cosine likeness, search engines find content linked by related meaning even if different words are used. This technique improves search precision by finding underlying connections quickly, thereby making sure strong and targeted content finding across platforms.

In what ways does semantic space boost search intent detection?

Semantic space improves search intent detection by grouping related terms and concepts that help systems understand user queries more intuitively. It shows subtle connections and context details that old keyword methods often overlook. This detailed alignment enables more accurate readings of search needs and provides results that align with user queries across a variety of applications.

Understanding nearest neighbor search in practice

Nearest neighbor search finds the data points closest to a given query in a vector space, playing a key role in practical vector search applications. It relies on precise distance metrics to measure similarity between vectors. This method supports various real-world systems—from recommendation engines to image recognition tools—enabling faster, more accurate retrieval on a global scale.

How is nearest neighbor search used in vector search?

Nearest neighbor search in vector systems finds data points with minimal distances to a query vector. This method, often run via algorithms like HNSW, plays a crucial role in retrieving semantically similar information rapidly. It allows scalable and efficient searches by comparing each query against vast, multidimensional datasets for real-time applications (Microsoft Learn).

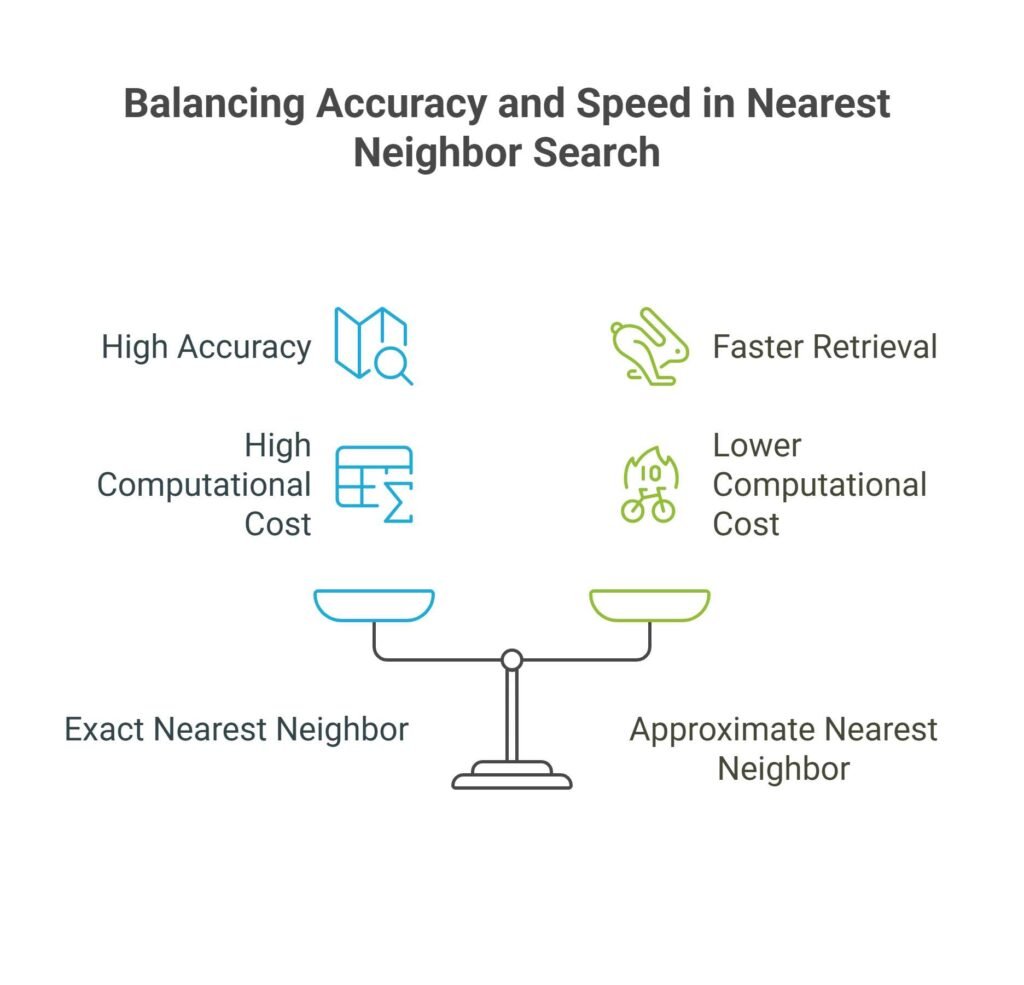

What is the difference between exact and approximate nearest neighbor search?

Exact nearest neighbor search computes distances across every vector to achieve best accuracy, but it often becomes slow on massive datasets. In contrast, rough search speeds up retrieval by checking only a subset of relevant vectors, trading off some precision for efficiency. Methods such as HNSW balance these factors skillfully, making sure that the results remain enough accurate for practical use (ClickHouse Docs).

Where do we see nearest neighbor search in real platforms?

Nearest neighbor search appears in numerous applications including recommendation engines, image retrieval systems, and various machine learning tasks. Its combining spans from tech giants to startups, using advanced data structures for improved efficiency. This method remains basic in fields such as computer vision and natural language processing, where its use across many industries is essential (Wikipedia).

How semantic search algorithms improve results

Semantic search algorithms interpret user intent by transforming input queries into vectors and aligning them with content forms. They integrate natural language models to represent context and nuanced meanings that traditional search methods often miss. These algorithms improve result ranking, offering users a richer and more accurate search experience through smart data processing.

What are semantic search algorithms?

Semantic search algorithms use machine learning to decode the intent behind user queries. They convert input text into embeddings, compare these vectors using techniques like k-nearest neighbor, and rank documents based on contextual relevance. These systems continuously learn and improve over time, improving result quality and user happiness for modern search areas.

How do these algorithms process vector relations?

These algorithms assess vector relations by measuring distances between query and document embeddings. They use techniques such as cosine likeness and Euclidean distance to determine how closely items align in multidimensional space. By combining math precision with expert context modeling, these systems provide refined search outputs that effectively reflect the true connections between content pieces (Instaclustr).

What are the benefits of semantic-based versus keyword-based algorithms?

Semantic-based algorithms represent context and reveal conceptual meanings, offering nuanced outcomes that align better with user intent than keyword-based methods. While keyword-based approaches focus on exact term matches, they might miss the underlying context. In contrast, semantic search provides richer and more flexible results, especially for complex queries, thereby making it ideal for diverse, modern search uses.

Conclusion

Vector search is a powerful approach that uses AI along with advanced models to convert text into precise numeric vectors. This process improves search abilities by representing critical user intent and connecting related content within a multidimensional space. Text embedding models, semantic vector spaces, and nearest neighbor search work together, transforming old search methods into context-aware, precise systems. By comparing these vector distances, search engines provide outcomes that closely reflect the true meanings behind queries. Semantic search algorithms further improve these results by using machine learning to decode connections between words and phrases. As these tools continue to evolve, businesses benefit from quicker data retrieval, improved customer experiences, and scalable solutions. Adopting vector search and semantic techniques prepares groups to handle growing data challenges while keeping pace with modern search demands. These improvements set a new standard in digital information retrieval.

FAQ

What is the difference between vector search and semantic search algorithms?

Vector search retrieves data by matching numeric vectors that represent underlying meanings, while semantic search decodes query context to compare conceptual relationships. Both approaches improve final outcomes, yet differ in their underlying processing techniques.

How do text embedding models improve search accuracy?

Text embedding models convert language into vectors that represent underlying meanings and contextual details. This change allows search engines to compare content on a semantic level, thereby significantly boosting retrieval accuracy and relevance consistently.

Why is vector representation of text better for modern data search?

Vector form represents deep semantic connections well beyond mere keywords, allowing systems to understand both context and nuance. This method leads to more accurate search outcomes, particularly in complex, large-scale datasets, thereby enabling consistent user satisfaction globally.

Can nearest neighbor search be used outside of search engines?

Yes, nearest neighbor search finds uses beyond search engines. It is widely employed in recommendation systems, as well as image and speech recognition, and in anomaly detection where assessing similarity among data points is crucial across varied fields.

What are the current limitations of vector search?

Current problems include high computer costs, ability to grow challenges in very large datasets, and sometimes problems in fine-tuning models for specific contexts. Ongoing research aims to address these issues while enhancing overall performance.

How does a semantic vector space change query intent detection?

A semantic vector space transforms query intent detection by allowing systems to assess subtle connections and contexts within queries. This results in improved search outputs that precisely match users’ underlying needs and provide more relevant information.

Ridam Khare is an SEO strategist with 7+ years of experience specializing in AI-driven content creation. He helps businesses scale high-quality blogs that rank, engage, and convert.