Ever wondered how websites and schools try to tell whether something was written by ChatGPT or by a person? With AI writing tools now everywhere, the need to distinguish human writing from machine writing has sparked a tech race. Behind every AI detector lies fascinating technology that hunts for subtle patterns in text that human eyes might miss.

The battle between AI creators and AI detectors gets more interesting every day. Let’s pull back the curtain on how these detection tools actually work, where they succeed, and where they stumble in identifying machine-written content.

“As AI gets more sophisticated, we knew we had a responsibility to bring a solution to market that can help anyone distinguish between human content and AI-generated text at such a fast rate.”

-Alon Yamin, CEO and Co-founder of Copyleaks

Follow the Words to Spot the Machine Behind Them

Why AI tends to sound confidently generic

AI writing has a particular “voice” that might not jump out at first glance. AI-generated text tends to sound authoritative but often lacks personality. That’s because language models are trained to predict which word is statistically likely to come next, and they are usually run with settings that favor those likely words over more creative or unique ones.

Think about it like this: AI plays it safe. It avoids the unusual word choices or quirky expressions that make human writing distinctive. Instead, it sticks to common phrases and predictable patterns that sound correct but generic.

- Human writers: Often inconsistent, personal, and willing to break conventions

- AI writers: Consistently fluent but predictable and lacking in distinct voice

This “statistical safeness” becomes a telltale sign that detectors can spot.
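The difference between “safe” and “surprising” word choices comes down to how the next word is picked. Here is a toy sketch, assuming a made-up next-word distribution (the words and probabilities are invented for illustration, not taken from any real model): greedy decoding always returns the top word, while temperature sampling occasionally picks a less likely one. Which decoding strategy and settings a real product uses varies and is not stated here.

```python
import random

# Hypothetical next-word probabilities after "The results were ..."
# (invented numbers, not from a real model).
NEXT_WORD_PROBS = {
    "significant": 0.45,
    "promising": 0.25,
    "mixed": 0.15,
    "baffling": 0.10,
    "delicious": 0.05,
}

def greedy_pick(probs):
    """Always return the single most likely word (the 'statistically safe' choice)."""
    return max(probs, key=probs.get)

def sample_pick(probs, temperature=1.0, rng=random):
    """Sample a word; temperatures above 1 flatten the distribution."""
    words = list(probs)
    # Raising p to 1/T and renormalizing is the same as dividing logits by T.
    weights = [probs[w] ** (1.0 / temperature) for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

if __name__ == "__main__":
    rng = random.Random(0)
    print("greedy: ", [greedy_pick(NEXT_WORD_PROBS) for _ in range(5)])
    print("sampled:", [sample_pick(NEXT_WORD_PROBS, 1.5, rng) for _ in range(5)])
```

Greedy output repeats “significant” every time, which is the kind of predictability detectors look for; sampling trades some of that predictability for variety.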

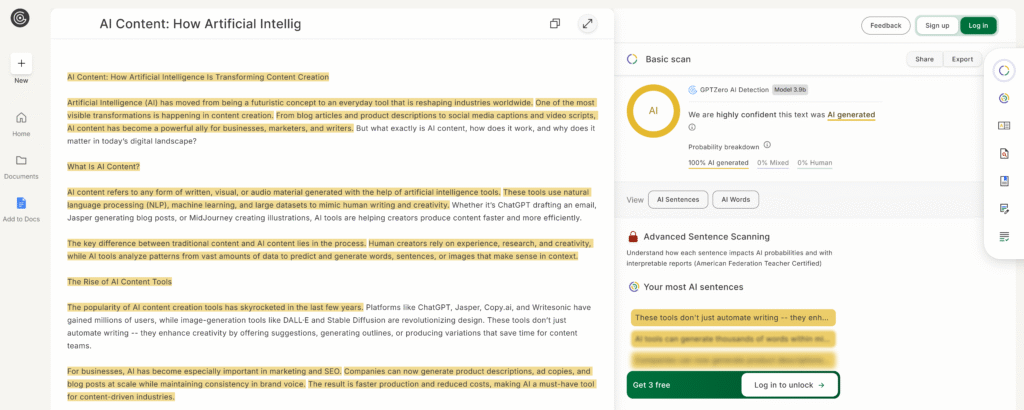

GPTZero AI Detection

How structure reveals non-human patterns

Many AI detectors use machine learning classifiers trained on large datasets of both human-written and AI-written text to learn the difference between them. These systems pick up on statistical patterns in language that humans can’t easily perceive.

The detection technology analyzes how words, sentences, and paragraphs connect. AI-generated content often follows consistent structural patterns that, while grammatically correct, lack the natural variation humans unconsciously create.

For example, AI tools frequently:

- Maintain consistent paragraph lengths

- Use similar transition phrases repeatedly

- Create predictable sentence structures

- Follow patterns in how they introduce and develop ideas

These subtle patterns create a mathematical fingerprint that detection algorithms can identify.
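As a rough illustration, two of the structural patterns listed above (uniform paragraph lengths and repeated transitions) can be turned into simple numbers. This sketch assumes paragraphs are separated by blank lines and uses a hypothetical list of stock transition phrases; commercial detectors learn their own features from training data and do not necessarily use these.

```python
import re
from collections import Counter
from statistics import mean, pstdev

# Hypothetical list of stock transitions; real detectors learn their own features.
TRANSITIONS = ("furthermore", "moreover", "additionally", "in conclusion", "overall")

def paragraph_length_cv(text):
    """Coefficient of variation (std / mean) of paragraph lengths in words.
    Lower values mean more uniform paragraphs."""
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    lengths = [len(p.split()) for p in paragraphs]
    if len(lengths) < 2:
        return 0.0  # not enough paragraphs to measure variation
    return pstdev(lengths) / mean(lengths)

def transition_counts(text):
    """Count each stock transition as a whole word or phrase, ignoring case."""
    lowered = text.lower()
    return Counter({
        phrase: len(re.findall(r"\b" + re.escape(phrase) + r"\b", lowered))
        for phrase in TRANSITIONS
    })
```

A low coefficient of variation combined with high transition counts would fit the “consistent structure” profile, but on its own neither number proves anything about authorship.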

Cracks that show up in unnatural syntax choices

Two technical measures often expose AI writing: perplexity and burstiness. Perplexity measures how predictable a text is to a language model; the more “surprised” the model is by each successive word, the higher the perplexity. Human writing tends to have higher perplexity, while AI text, built from likely word choices, tends to have lower perplexity.
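In formula terms, if a language model assigns probabilities p_1, ..., p_N to the words that actually appear, perplexity is exp(-(1/N) · Σ log p_i): the exponential of the average negative log-probability. The sketch takes those probabilities as given; in a real detector they would come from running a language model over the text, and the numbers here are invented for illustration.

```python
import math

def perplexity(token_probs):
    """Perplexity from the probability a language model gave each actual next word:
    the exponential of the average negative log-probability."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)

# Invented per-word probabilities, not output from a real model.
predictable = [0.9, 0.8, 0.85, 0.9]   # model guessed each next word easily
surprising = [0.3, 0.05, 0.4, 0.1]    # model was often surprised
print(round(perplexity(predictable), 2), round(perplexity(surprising), 2))
```

A perfectly predicted text (every probability 1.0) has perplexity 1; the lower the probabilities, the higher the score.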

Burstiness refers to variation in sentence structure and length. Humans naturally mix short, punchy sentences with long, complex ones. AI text often lacks this variation, producing an even rhythm that can feel too perfect.
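There is no single standard formula for burstiness. One simple proxy, assumed here, is the spread of sentence lengths relative to their average. The sentence splitter is deliberately naive (it splits after “.”, “!” or “?” followed by whitespace), so abbreviations such as “e.g.” will confuse it.

```python
import re
from statistics import mean, pstdev

def sentence_lengths(text):
    """Words per sentence, using a naive split after ., ! or ? plus whitespace."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Proxy for burstiness: std of sentence length divided by its mean.
    0 means every sentence has the same length."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # a single sentence has no variation to measure
    return pstdev(lengths) / mean(lengths)
```

Three equal-length sentences score 0, while a one-word sentence followed by a long one scores well above it, matching the “sudden bursts” the paragraph describes.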

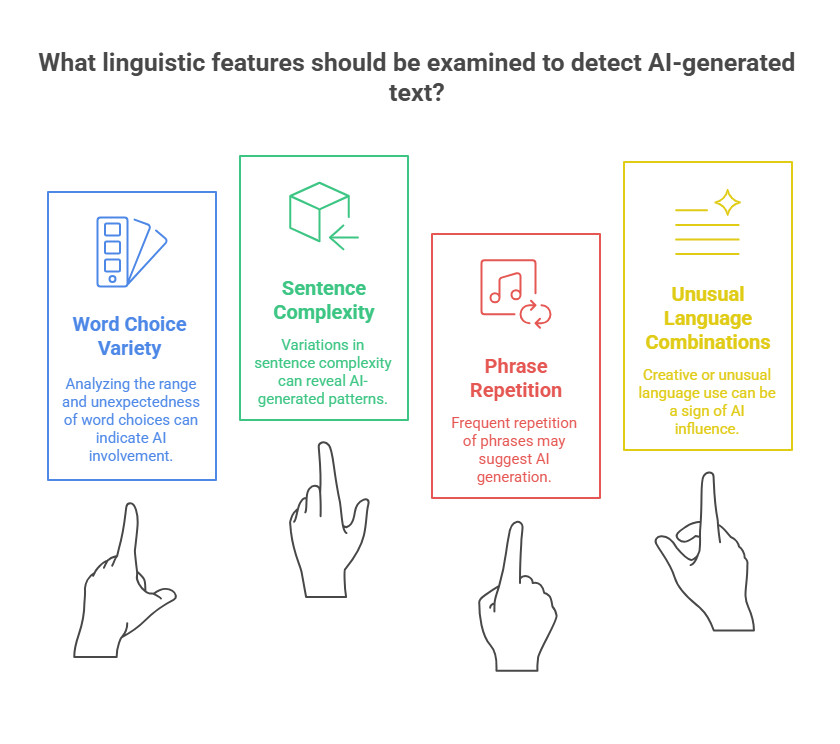

AI detectors examine these patterns and other linguistic features like:

- Word choice variety and unexpectedness

- Sentence complexity variations

- Phrase repetition frequencies

- Use of unusual or creative language combinations
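Two of the features listed above, word choice variety and phrase repetition, have simple textbook proxies: the type-token ratio (distinct words divided by total words) and counts of repeated word n-grams. This sketch uses a hypothetical lowercase-letters-only tokenizer; it shows what such features measure, not how any particular detector computes them.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase word tokens; a deliberately simple tokenizer."""
    return re.findall(r"[a-z']+", text.lower())

def type_token_ratio(text):
    """Distinct words / total words: a crude measure of word-choice variety."""
    tokens = tokenize(text)
    return len(set(tokens)) / len(tokens) if tokens else 0.0

def repeated_ngrams(text, n=3):
    """Return the n-word phrases that occur more than once, with their counts."""
    tokens = tokenize(text)
    grams = Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return {gram: count for gram, count in grams.items() if count > 1}
```

The type-token ratio naturally falls as a text gets longer, so it is only meaningful when comparing texts of similar length.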

Do AI Detectors Work or Just Make a Guess?

How weak signals still mislead top AI detection tools

No AI detector is perfect. Detectors and AI writing tools are locked in a cat-and-mouse game in which every advance in generation makes detection harder. Even top detection systems can misclassify human writing as AI-generated roughly 5% of the time.

When humans edit AI-generated text, detection becomes even harder. Simple changes like rewording sentences, adding personal examples, or introducing intentional errors can significantly reduce detection rates.

The fundamental challenge is that detectors must work with probability rather than certainty. They estimate the likelihood that content was AI-generated based on statistical patterns, which inherently means some texts will be wrongly classified.

Fine-tuning detection to reduce false positives

To improve accuracy, developers are constantly fine-tuning their detection algorithms. This involves several approaches:

| Detection Strategy | How It Works |
|---|---|
| Confidence thresholds | Setting minimum certainty levels before flagging content as AI-generated |
| Multi-model consensus | Using multiple detection models and requiring agreement |
| Human verification | Incorporating expert review for borderline cases |
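The three strategies in the table can be combined in a few lines. This sketch assumes each detector returns a score between 0 and 1 for how likely a text is to be AI-generated; the detectors, the threshold values, and the two-detector agreement rule are hypothetical choices, not any vendor’s settings.

```python
from dataclasses import dataclass

@dataclass
class Verdict:
    label: str          # "ai", "human", or "uncertain"
    needs_review: bool  # whether to route the text to a human reviewer

def combine_scores(scores, flag_threshold=0.9, clear_threshold=0.2, min_agree=2):
    """Combine AI-likelihood scores (0 to 1) from several hypothetical detectors.

    - Flag as AI only if at least `min_agree` detectors score >= flag_threshold.
    - Call it human only if every detector scores below clear_threshold.
    - Anything in between is treated as borderline and sent to human review.
    """
    if not scores:
        raise ValueError("need at least one detector score")
    confident_ai = sum(1 for s in scores if s >= flag_threshold)
    if confident_ai >= min_agree:
        return Verdict("ai", needs_review=False)
    if all(s < clear_threshold for s in scores):
        return Verdict("human", needs_review=False)
    return Verdict("uncertain", needs_review=True)
```

For example, `combine_scores([0.95, 0.30, 0.40])` returns an “uncertain” verdict because only one detector is confident. In high-stakes settings you might also send every “ai” verdict to a reviewer.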

The goal is balancing sensitivity (catching AI text) with specificity (not falsely flagging human writing). This balance is crucial in educational settings where false accusations of using AI could have serious consequences.
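Sensitivity and specificity are simple ratios over a labeled evaluation set. The counts below are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def sensitivity(tp, fn):
    """Share of AI-written texts the detector catches: TP / (TP + FN)."""
    return tp / (tp + fn)

def specificity(tn, fp):
    """Share of human-written texts correctly left unflagged: TN / (TN + FP)."""
    return tn / (tn + fp)

# Hypothetical evaluation: 100 AI-written texts and 100 human-written texts.
tp, fn = 90, 10   # AI texts flagged / missed
tn, fp = 95, 5    # human texts cleared / wrongly flagged
print(sensitivity(tp, fn), specificity(tn, fp))  # prints 0.9 0.95
```

Raising the flagging threshold usually increases specificity at the cost of sensitivity, which is the trade-off developers tune.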

Surprising ways detectors over-rely on probability stats

AI detectors make judgments based on probability distributions rather than concrete evidence. This creates some unexpected blind spots:

- Creative human writers who use unusual structures may trigger false positives

- Non-native English speakers are often flagged incorrectly, partly because they tend to use a narrower, more predictable vocabulary, which lowers perplexity

- Technical writing with specialized vocabulary can confuse detectors

- Short texts provide insufficient data for accurate detection

The most advanced AI models, such as GPT-4, are increasingly difficult to detect because they more closely mimic the variation in human writing style.

Is AI Plagiarism If No Words Are Copied?

How idea originality is reshaped in the AI era

The concept of originality gets fuzzy with AI. When an AI system generates content based on millions of existing texts, is the output truly “original” even if it doesn’t directly copy any single source?

Traditional plagiarism meant copying specific words or ideas without attribution. AI-generated content creates a new category: it produces “statistically likely” text based on patterns learned from human writing, usually without directly copying any specific source.

This raises complex questions about:

- What constitutes original thought

- The value of human creativity versus statistical prediction

- Where to draw the line between inspiration and derivation

Why AI outputs lack true authorship and intent

AI systems don’t “understand” what they write in any meaningful sense. They lack:

- Personal experience to draw from

- Beliefs or values to express

- Intent or purpose behind their words

- Accountability for accuracy or impact

This fundamental difference from human authorship creates ethical questions about using AI-generated content in contexts where personal voice, accountability, or authentic expression matters.

Tracking origins in machine text is trickier than you think

Unlike traditional plagiarism where text might match a specific source, AI-generated content draws from countless sources in ways that make tracking nearly impossible.

AI detectors don’t actually identify the sources used to generate content. Instead, they analyze patterns that suggest machine generation.

Some AI systems now include watermarking technology that embeds invisible patterns into generated text, but these can often be removed through editing or paraphrasing.
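One published approach to watermarking, the “green list” scheme proposed by Kirchenbauer et al. in 2023, works roughly like this: the previous token seeds a pseudo-random split of the vocabulary into “green” and “red” words, generation is nudged toward green words, and a detector that knows the seeding rule counts how many words are green. The sketch below is a simplified, word-level version under assumed choices (SHA-256 of the word pair as the seed, half of all words green); it is not the scheme used by any particular product.

```python
import hashlib
import math

GAMMA = 0.5  # assumed fraction of words that count as "green" at each step

def is_green(prev_word, word, gamma=GAMMA):
    """Pseudo-randomly assign `word` to the green list, seeded by the previous word.
    Hashing the word pair stands in for the seeded vocabulary split."""
    digest = hashlib.sha256(f"{prev_word}|{word}".encode("utf-8")).digest()
    # Map the first 8 bytes to a number in [0, 1) and compare with gamma.
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma

def watermark_z_score(words, gamma=GAMMA):
    """z-score of the green-word count versus what unwatermarked text would give.
    Large positive values suggest a watermark; values near 0 suggest none."""
    if len(words) < 2:
        raise ValueError("need at least two words")
    n = len(words) - 1  # number of scored (previous, current) word pairs
    green = sum(is_green(p, w, gamma) for p, w in zip(words, words[1:]))
    return (green - gamma * n) / math.sqrt(n * gamma * (1 - gamma))
```

This also shows why paraphrasing weakens such watermarks: each replaced word has only a coin-flip chance of being green, so heavy rewording pulls the z-score back toward zero.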

Conclusion

AI detectors represent a fascinating technological response to the growth of AI writing tools. While they’re getting better at spotting machine-generated text, they remain imperfect tools that rely on probability rather than certainty. The balance between detection accuracy and false positives continues to evolve as both AI writing and detection technologies advance.

For students, writers, and content creators, understanding how these systems work offers valuable insight into both the current limitations and the ethical questions surrounding AI-generated content. As the line between human and machine writing grows thinner, our approach to concepts like originality, authenticity, and authorship will need to evolve as well.

Frequently Asked Questions

How do AI detectors work on different languages?

AI detectors are typically most accurate in English since they’re trained primarily on English datasets. Their effectiveness decreases with less common languages due to smaller training data. Some multilingual detectors exist, but they generally perform better on major languages like Spanish, French, and German than on languages with fewer speakers or different writing systems.

Are AI detectors accurate enough for grading or hiring?

Currently, AI detectors aren’t reliable enough to be the sole basis for high-stakes decisions like grading or hiring. With false positive rates of 5% or higher, they risk unfairly penalizing genuine human work. Best practice involves using them as screening tools followed by human judgment, especially when consequences are significant.
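A quick calculation shows why a flag alone is weak evidence. The inputs are hypothetical: the 5% false-positive rate comes from the figure above, while the class size, the share of AI-written essays, and the 90% sensitivity are assumed purely for illustration.

```python
def flagged_breakdown(n_texts, ai_share, sensitivity, false_positive_rate):
    """Expected true and false AI flags in a batch, plus the share of flags that are correct."""
    n_ai = n_texts * ai_share
    n_human = n_texts - n_ai
    true_flags = n_ai * sensitivity               # AI texts correctly flagged
    false_flags = n_human * false_positive_rate   # human texts wrongly flagged
    total = true_flags + false_flags
    precision = true_flags / total if total else 0.0
    return true_flags, false_flags, precision

# Hypothetical class: 200 essays, 10% AI-written, 90% sensitivity, 5% false positives.
true_flags, false_flags, precision = flagged_breakdown(200, 0.10, 0.90, 0.05)
print(round(true_flags), round(false_flags), round(precision, 2))  # prints 18 9 0.67
```

Under these assumed numbers, 9 of the 27 flagged essays (about one in three) would be human-written, because most of the class writes its own work.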

Is AI plagiarism if a tool rewrites your human draft?

Using AI to rewrite your own draft typically isn’t considered plagiarism, but it does raise questions about authorship. Many educational institutions and publishers now require disclosure when AI tools were used in the writing process. The ethical considerations depend heavily on the context and purpose of the writing.

Can AI detection wrongly flag original work?

Yes, false positives are a significant issue with AI detectors. Original human writing can be flagged as AI-generated, especially if it’s highly structured, uses common phrases, or follows conventional patterns. Creative writers, technical writers, and non-native English speakers face higher risks of false detection.

How often do AI detectors give false results?

Even leading AI detectors have error rates of 5-15%. False positives (human writing identified as AI) and false negatives (AI writing identified as human) both occur regularly. The accuracy varies based on text length, style, subject matter, and how much human editing has been applied to AI-generated content.

Can AI detection stop future misuse in academic writing?

AI detection alone won’t solve academic integrity concerns. As detection improves, so do methods to evade it. A more comprehensive approach combines detection technology with educational policies that embrace AI as a tool while teaching proper attribution and maintaining focus on learning outcomes rather than just final products.